How to Use AI in Software Testing: A Complete Guide

Did you know that 40% of testers are now using ChatGPT for test automation, and 39% of testing teams have reported efficiency gains through reduced manual effort and faster execution? These figures highlight the growing adoption of AI in software testing and its proven ability to improve productivity.

As businesses strive to accelerate development cycles while maintaining software quality, the demand for more efficient testing methods has risen substantially. This is where AI-driven testing tools come into play thanks to their capability to automate repetitive tasks, detect defects early, and improve test accuracy.

In this article, we’ll take a close look at the role of AI in software testing, from its use cases and its advantages over manual testing to how businesses can effectively implement AI-powered solutions.

What is AI in Software Testing?

As software systems become more complex, traditional testing methods are struggling to keep pace. A McKinsey study on embedded software in the automotive industry revealed that software complexity has quadrupled over the past decade. This rapid growth makes it increasingly challenging for testing teams to maintain software stability while keeping up with tight development timelines.


The adoption of artificial intelligence in software testing marks a significant shift in quality assurance. By drawing on machine learning, natural language processing, and data analytics, AI-driven testing improves precision, automates repetitive tasks, and can even predict defects before they escalate. Together, these capabilities contribute to a more efficient and reliable testing process.

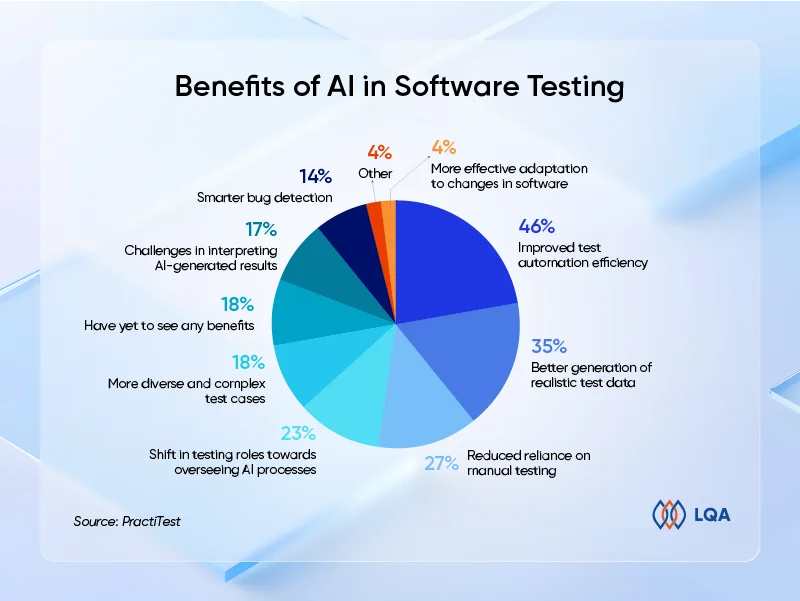

According to a survey by PractiTest, AI’s most notable benefits to software testing include improved test automation efficiency (45.6%) and the ability to generate realistic test data (34.7%). Additionally, AI is reshaping testing roles, with 23% of teams now overseeing AI-driven processes rather than executing manual tasks, while 27% report a reduced reliance on manual testing. However, AI’s ability to adapt to evolving software requirements (4.08%) and generate a broader range of test cases (18%) is still developing.

Benefits of AI in software testing

AI Software Testing vs Manual Software Testing

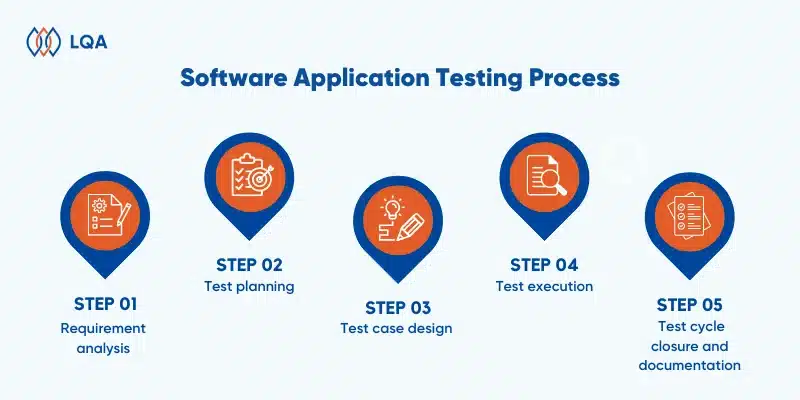

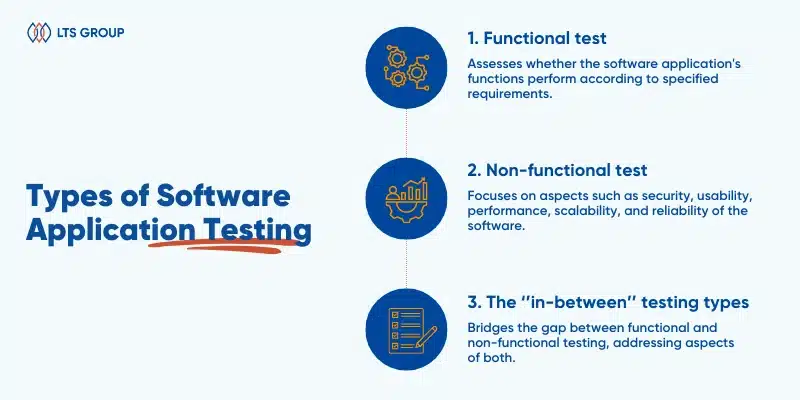

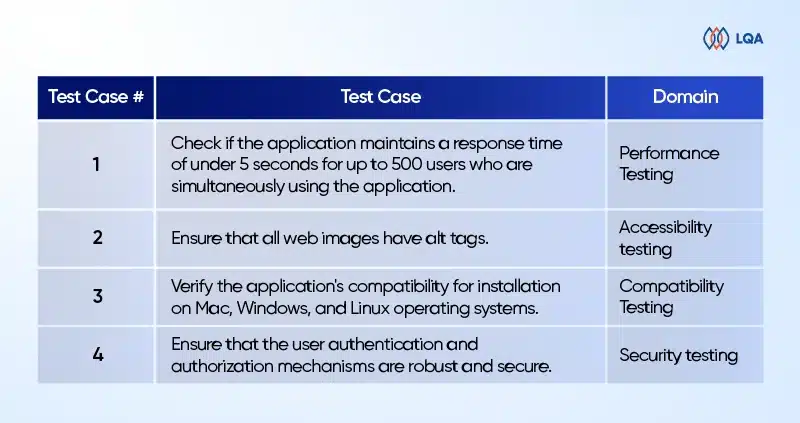

Traditional software testing follows a structured process known as the software testing life cycle (STLC), which comprises six main stages: requirement analysis, test planning, test case development, environment setup, test execution, and test cycle closure.

AI-powered testing operates within the same framework but introduces automation and intelligence to increase speed, accuracy, and efficiency. By integrating AI into the STLC, testing teams can achieve more precise results in less time. Here’s how AI changes several of these stages:

- Requirement analysis: AI evaluates stakeholder requirements and recommends a comprehensive test strategy.

- Test planning: AI creates a tailored test plan, focusing on areas with high-risk test cases and adapting to the organization’s unique needs.

- Test case development: AI generates, customizes, and self-heals test scripts, also providing synthetic test data as needed.

- Test cycle closure: AI assesses defects, forecasts trends, and automates the reporting process.

While AI brings significant advantages, manual testing remains irreplaceable in certain cases.

For a detailed look at the key differences between the two approaches, refer to the table below:

| Aspect | Manual testing | AI testing |
| --- | --- | --- |
| Speed and efficiency | Time-consuming and needs significant human effort. Best for exploratory, usability, and ad-hoc testing. | Executes thousands of tests in parallel, reducing redundancy and optimizing efficiency. Learns and improves over time. |
| Accuracy and reliability | Prone to human errors, inconsistencies, and fatigue. | Provides consistent execution, eliminates human errors, and predicts defects using historical data. |
| Test coverage | Limited by time and resources. Suitable for real-world scenario assessments that automated tools might miss. | Expands test coverage significantly, identifying high-risk areas and executing thousands of test cases within minutes. |
| Cost and resource | Requires skilled testers, leading to high long-term costs. Labor-intensive for large projects. Best for small-scale applications. | Reduces long-term expenses by minimizing manual effort. AI-driven testing automation tools automate test creation and execution, running continuously. |
| Test maintenance | Needs frequent updates and manual adjustments for every software change, increasing maintenance costs. | Self-healing test scripts automatically adjust to evolving applications, reducing maintenance efforts. |
| Scalability | Difficult to scale across multiple platforms, demanding additional testers for large projects. | Easily scalable with cloud-based execution, supporting parallel tests across different devices and browsers. Ideal for large-scale enterprise applications. |

Learn more: Automation testing vs. manual testing: Which is the cost-effective solution for your firm?

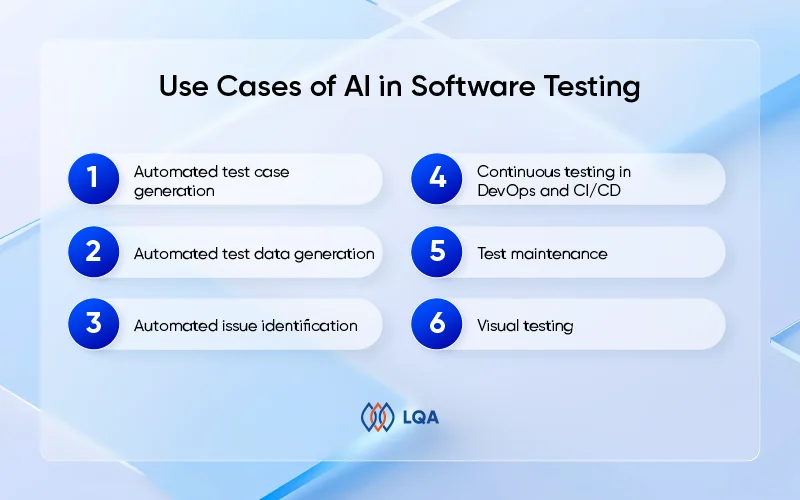

Use Cases of AI in Software Testing

According to the State of Software Quality Report 2024, test case generation is the most common AI application in both manual and automated testing, followed closely by test data generation.

Still, AI and ML can advance software testing in many other ways. Below are 6 key areas where these two technologies can make the biggest impact:


Automated test case generation

Basic coding tasks that once required human effort can now be handled by AI, and the same is true in software testing: AI-powered tools can generate test cases based on given requirements.

Traditionally, automation testers had to write test scripts manually using specific frameworks, which required both coding expertise and continuous maintenance. As the software evolved, outdated scripts often failed to recognize changes in source code, leading to inaccurate test results. This created a significant challenge for testers working in agile environments, where frequent updates and rapid iterations demand ongoing script modifications.

With generative AI in software testing, QA professionals can now provide simple language prompts to instruct the chatbot to create test scenarios tailored to specific requirements. AI algorithms will then analyze historical data, system behavior, and application interactions to produce comprehensive test cases.
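The prompt-driven workflow described above can be sketched roughly as follows. This is a minimal illustration, not the behavior of any particular tool: the `generate` callable stands in for whatever LLM client a team uses, and the prompt wording, the JSON reply format, and the `fake_llm` stub are all assumptions made for the example.

```python
import json
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class GeneratedCase:
    title: str
    steps: List[str]
    expected: str


def build_prompt(requirement: str) -> str:
    """Turn a plain-language requirement into an instruction for the model."""
    return (
        "You are a QA engineer. Write test cases for the requirement below.\n"
        "Respond with a JSON list of objects with keys: title, steps, expected.\n\n"
        f"Requirement: {requirement}"
    )


def generate_test_cases(
    requirement: str, generate: Callable[[str], str]
) -> List[GeneratedCase]:
    """Send the prompt to the model and parse its JSON reply into test cases."""
    raw_reply = generate(build_prompt(requirement))
    return [GeneratedCase(**item) for item in json.loads(raw_reply)]


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call; always returns a canned reply."""
    return json.dumps([
        {
            "title": "Valid login",
            "steps": ["Open login page", "Enter valid credentials", "Submit"],
            "expected": "Dashboard is shown",
        },
        {
            "title": "Wrong password",
            "steps": ["Open login page", "Enter a wrong password", "Submit"],
            "expected": "Error message is shown",
        },
    ])


if __name__ == "__main__":
    for case in generate_test_cases("Users can log in with email and password", fake_llm):
        print(case.title, "->", case.expected)
```

In practice, a model's reply may not be valid JSON, so a production version would likely validate the output and have a tester review the generated cases before they enter the suite.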

Automated test data generation

In many cases, using real-world data for software testing is restricted due to compliance requirements and data privacy regulations. AI-driven synthetic test data generation addresses this challenge by creating realistic, customized datasets that mimic real-world conditions while maintaining data security.

AI can quickly generate test data tailored to an organization’s specific needs. For example, a global company may require test data reflecting different regions, including address formats, tax structures, and currency variations. By automating this process, AI not only eliminates the need for manual data creation but also boosts diversity in test scenarios.
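As a rough illustration of region-aware synthetic data, the sketch below produces order records whose address layout, currency, and tax differ by region. It uses simple random sampling rather than a trained model, and the region profiles, tax rates, street names, and city names are placeholder assumptions rather than real reference data.

```python
import random
from dataclasses import dataclass
from typing import List

# Hypothetical region profiles; formats and tax rates are illustrative placeholders.
REGION_PROFILES = {
    "US": {"currency": "USD", "tax_rate": 0.07,
           "address": "{number} {street}, {city}, {postcode}"},
    "DE": {"currency": "EUR", "tax_rate": 0.19,
           "address": "{street} {number}, {postcode} {city}"},
    "JP": {"currency": "JPY", "tax_rate": 0.10,
           "address": "{postcode} {city}, {street} {number}"},
}
STREETS = ["Main Street", "Station Road", "Park Avenue"]
CITIES = ["Springfield", "Riverside", "Lakeview"]


@dataclass
class SyntheticOrder:
    region: str
    address: str
    currency: str
    net_amount: float
    tax_amount: float


def make_order(region: str, rng: random.Random) -> SyntheticOrder:
    """Build one fake order that follows the region's address, currency, and tax rules."""
    profile = REGION_PROFILES[region]
    address = profile["address"].format(
        number=rng.randint(1, 999),
        street=rng.choice(STREETS),
        city=rng.choice(CITIES),
        postcode=str(rng.randint(10000, 99999)),
    )
    net = round(rng.uniform(5, 500), 2)
    tax = round(net * profile["tax_rate"], 2)
    return SyntheticOrder(region, address, profile["currency"], net, tax)


def make_dataset(size: int, seed: int = 42) -> List[SyntheticOrder]:
    """Generate a reproducible dataset that cycles through all regions."""
    rng = random.Random(seed)
    regions = sorted(REGION_PROFILES)
    return [make_order(regions[i % len(regions)], rng) for i in range(size)]


if __name__ == "__main__":
    for order in make_dataset(3):
        print(order)
```

Seeding the generator keeps the dataset reproducible, which makes a failing test easier to replay with the same inputs.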

Automated issue identification

AI-driven testing solutions use intricate algorithms and machine learning to detect, classify, and prioritize software defects autonomously. This accelerates issue identification and resolution, ultimately improving software quality through continuous improvement.

The process begins with AI analyzing multiple aspects of the software, such as behavior, performance metrics, and user interactions. By processing large volumes of data and recognizing historical patterns, AI can pinpoint anomalies or deviations from expected functionality. These insights help uncover potential defects that could compromise the software’s reliability.

One of AI’s major advantages is its ability to prioritize detected issues based on severity and impact. By categorizing problems into different levels of criticality, AI enables testing teams to focus on high-risk defects first. This strategic approach optimizes testing resources, reduces the likelihood of major failures in production, and enhances overall user satisfaction.
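The detection and prioritization ideas above can be approximated with basic statistics. In this sketch, a z-score threshold stands in for a trained anomaly model, and the priority score (severity multiplied by the share of affected users) is a hypothetical formula chosen for illustration; real tools are likely to weigh many more signals.

```python
from dataclasses import dataclass
from statistics import mean, stdev
from typing import List


def find_anomalies(
    baseline: List[float], observed: List[float], threshold: float = 3.0
) -> List[int]:
    """Return indexes of observed values that deviate from the baseline mean
    by more than `threshold` standard deviations."""
    mu, sigma = mean(baseline), stdev(baseline)
    return [i for i, value in enumerate(observed) if abs(value - mu) > threshold * sigma]


@dataclass
class Defect:
    name: str
    severity: int          # assumed scale: 1 (minor) to 5 (critical)
    affected_users: float  # share of users affected, 0.0 to 1.0


def prioritize(defects: List[Defect]) -> List[Defect]:
    """Order defects so the highest severity-times-reach score comes first."""
    return sorted(defects, key=lambda d: d.severity * d.affected_users, reverse=True)


if __name__ == "__main__":
    baseline_ms = [100, 102, 98, 101, 99]   # response times from healthy runs
    latest_ms = [101, 150, 97, 99]          # response times from the newest build
    print("Anomalous samples:", find_anomalies(baseline_ms, latest_ms))

    backlog = [
        Defect("Typo on help page", 1, 0.9),
        Defect("Checkout crash", 5, 0.4),
        Defect("Slow search", 3, 0.5),
    ]
    for defect in prioritize(backlog):
        print(defect.name)
```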

Continuous testing in DevOps and CI/CD

AI plays a vital role in streamlining testing within DevOps and continuous integration/continuous deployment (CI/CD) environments.

Once AI is integrated with DevOps pipelines, testing becomes an ongoing process that is seamlessly triggered with each code change. This means every time a developer pushes new code, AI automatically initiates necessary tests. This process speeds up feedback loops, providing instant insights into the quality of new code and accelerating release cycles.

Generally, AI’s ability to automate test execution after each code update allows teams to release software updates more frequently and with greater confidence, improving time-to-market and product quality.
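One way to picture "initiating the necessary tests" on each push is change-based test selection. The sketch below uses a static, hand-written map from source files to tests; an AI-driven tool would more plausibly learn this mapping from coverage data and past failures. All file names here are hypothetical.

```python
from typing import Dict, Iterable, List, Set

# Hypothetical map from source files to the tests that exercise them.
COVERAGE_MAP: Dict[str, Set[str]] = {
    "app/cart.py": {"tests/test_cart.py", "tests/test_checkout.py"},
    "app/payment.py": {"tests/test_checkout.py", "tests/test_payment.py"},
    "app/search.py": {"tests/test_search.py"},
}
# Tests that run on every push regardless of what changed.
SMOKE_TESTS: Set[str] = {"tests/test_smoke.py"}


def select_tests(changed_files: Iterable[str]) -> List[str]:
    """Pick the tests to run for a push, based on which files changed."""
    selected = set(SMOKE_TESTS)
    for path in changed_files:
        if path not in COVERAGE_MAP:
            # Unknown file: fall back to the full suite to stay on the safe side.
            return sorted(set().union(SMOKE_TESTS, *COVERAGE_MAP.values()))
        selected |= COVERAGE_MAP[path]
    return sorted(selected)


if __name__ == "__main__":
    print(select_tests(["app/cart.py"]))
    print(select_tests(["app/brand_new_module.py"]))
```

A CI job could pass the output of this function to the test runner, so small changes get fast feedback while unfamiliar changes still trigger the whole suite.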

Test maintenance

Test maintenance, especially for web and user interface (UI) testing, can be a significant challenge. As web interfaces frequently change, test scripts often break when they can no longer locate elements due to code updates. This is particularly problematic when test scripts interact with web elements through locators (unique identifiers for buttons, links, images, etc.).

In traditional testing approaches, maintaining these test scripts can be time-consuming and resource-intensive. Artificial intelligence brings a solution to this issue. When a test breaks due to a change in a web element’s locator, AI can automatically fetch the updated locator so that the test continues to run smoothly without requiring manual intervention.

Automating this process considerably reduces the testing team’s maintenance workload and improves testing efficiency.
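The locator-recovery idea can be sketched without a browser. The example below assumes each element is represented as a plain dictionary of attributes and that the test remembers the attributes of the element it used last time; when the original id no longer exists, it falls back to the closest attribute match. The similarity measure and the 0.6 threshold are illustrative assumptions, not how any specific self-healing tool works.

```python
from typing import Dict, List, Optional

Element = Dict[str, str]


def similarity(known: Element, candidate: Element) -> float:
    """Share of the remembered attributes that the candidate still matches."""
    if not known:
        return 0.0
    matches = sum(1 for key, value in known.items() if candidate.get(key) == value)
    return matches / len(known)


def heal_locator(
    known: Element, page: List[Element], min_score: float = 0.6
) -> Optional[Element]:
    """Find the element by its remembered id, or fall back to the best attribute match."""
    known_id = known.get("id")
    for element in page:
        if known_id is not None and element.get("id") == known_id:
            return element  # original locator still works

    best = max(page, key=lambda element: similarity(known, element), default=None)
    if best is not None and similarity(known, best) >= min_score:
        return best  # "healed" locator: same element under a new id
    return None  # nothing similar enough; a human should look at this


if __name__ == "__main__":
    remembered = {"id": "submit-btn", "tag": "button",
                  "text": "Place order", "class": "btn primary"}
    current_page = [
        {"id": "cancel", "tag": "button", "text": "Cancel", "class": "btn"},
        {"id": "order-submit", "tag": "button",
         "text": "Place order", "class": "btn primary"},
    ]
    print(heal_locator(remembered, current_page))
```

Returning `None` below the threshold is a deliberate choice: a wrong guess could make a test pass against the wrong element, which is worse than a visible failure.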

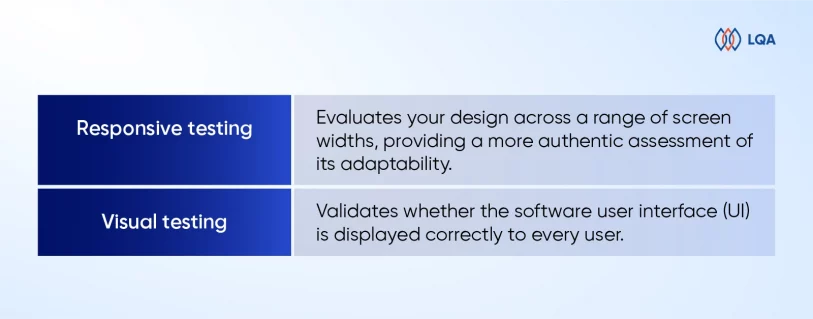

Visual testing

Visual testing has long been a challenge for software testers, especially when it comes to comparing how a user interface looks before and after a launch. Previously, human testers relied on their eyes to spot any visual differences. Yet, automation introduces complications – computers detect even the slightest pixel-level variations as visual bugs, even when these inconsistencies have no real impact on user experience.

AI-powered visual testing tools overcome these limitations by analyzing UI changes in context rather than rigidly comparing pixels. These tools can:

- Intelligently ignore irrelevant changes: AI learns which UI elements frequently update and excludes them from unnecessary bug reports.

- Maintain UI consistency across devices: AI compares images across multiple platforms and detects significant inconsistencies.

- Adapt to dynamic elements: AI understands layout and visual adjustments, making sure they enhance rather than disrupt user experience.
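The difference between rigid pixel comparison and a more tolerant comparison can be shown with a small rule-based sketch. It assumes screenshots are available as grids of grayscale values, uses a fixed brightness tolerance, and takes manually specified regions to ignore; AI-based tools would typically learn what to ignore instead of being told.

```python
from typing import List, Sequence, Tuple

Image = List[List[int]]             # grayscale pixels, 0 to 255
Region = Tuple[int, int, int, int]  # top, left, bottom, right (bottom/right exclusive)


def in_any_region(row: int, col: int, regions: Sequence[Region]) -> bool:
    """True if the pixel lies inside one of the ignored regions."""
    return any(top <= row < bottom and left <= col < right
               for top, left, bottom, right in regions)


def visual_diff_ratio(
    before: Image, after: Image, ignore: Sequence[Region] = (), tolerance: int = 10
) -> float:
    """Share of compared pixels whose brightness changed by more than `tolerance`."""
    compared = changed = 0
    for r, (row_before, row_after) in enumerate(zip(before, after)):
        for c, (old, new) in enumerate(zip(row_before, row_after)):
            if in_any_region(r, c, ignore):
                continue  # e.g. a rotating banner or a timestamp
            compared += 1
            if abs(old - new) > tolerance:
                changed += 1
    return changed / compared if compared else 0.0


if __name__ == "__main__":
    before = [[100] * 4 for _ in range(4)]
    after = [row[:] for row in before]
    after[0][0] = 103   # rendering noise, within tolerance
    after[3][3] = 200   # dynamic content inside an ignored region
    after[1][1] = 180   # a real visual change
    ratio = visual_diff_ratio(before, after, ignore=[(3, 3, 4, 4)])
    print(f"{ratio:.3f} of compared pixels changed")
```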

How to Use AI in Software Testing?

Ready to start integrating AI into your software testing processes? Follow the steps below.


Step 1. Identify areas where AI can improve software testing

Before incorporating AI into testing processes, decision-makers must pinpoint the testing areas that stand to benefit the most.

Here are a few ideas to get started with:

- Automated test case generation

- Automated test data generation

- Automated issue identification

- Continuous testing in DevOps and CI/CD

- Test maintenance

- Visual testing

Once these areas are identified, set clear objectives and success metrics for AI adoption. Common goals include increasing test coverage, test execution speed, and defect detection rates.

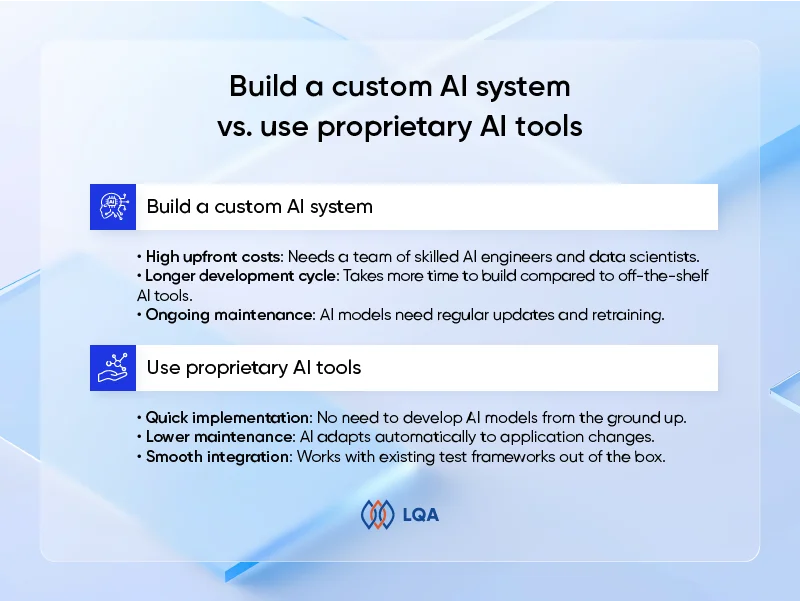

Step 2. Choose between building from scratch or using proprietary AI tools

The next step is to choose whether to develop a custom AI solution or adopt a ready-made AI-powered testing tool.

The right choice depends on the organization’s resources, long-term strategy, and testing requirements.

Here’s a quick look at these 2 methods:

Build a custom AI system or use proprietary AI tools?

Build a custom AI system

In-house development allows for a personalized AI solution that meets specific business needs. However, this approach requires significant investment and expertise:

- High upfront costs: Needs a team of skilled AI engineers and data scientists.

- Longer development cycle: Takes more time to build compared to off-the-shelf AI tools.

- Ongoing maintenance: AI models need regular updates and retraining.

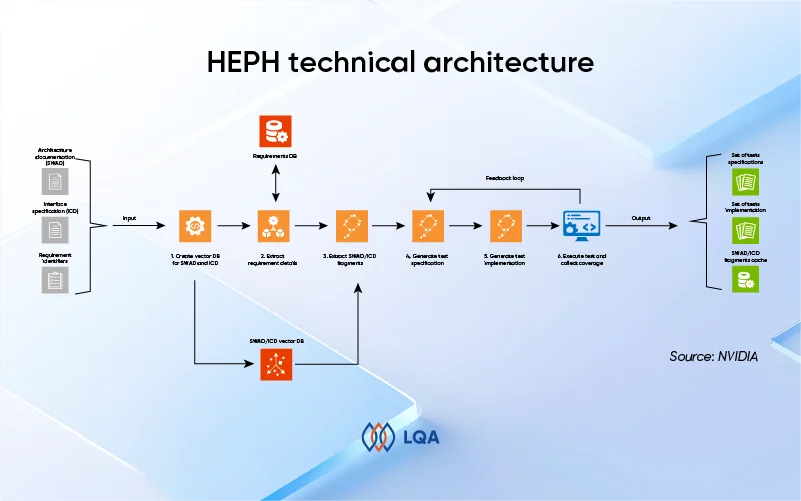

Case study: NVIDIA’s Hephaestus (HEPH)

The DriveOS team at NVIDIA developed Hephaestus, an internal generative AI framework to automate test generation. HEPH simplifies the design and implementation of integration and unit tests by using large language models for input analysis and code generation. This greatly reduces the time spent on creating test cases while boosting efficiency through context-aware testing.

How does HEPH work?

HEPH takes in software requirements, software architecture documents (SWADs), interface control documents (ICDs), and test examples to generate test specifications and implementations for the given requirements.

HEPH technical architecture

The test generation workflow includes the following steps:

- Data preparation: Input documents such as SWADs and ICDs are indexed and stored in an embedding database, which is then used to query relevant information.

- Requirements extraction: Requirement details are retrieved from the requirement storage system (e.g., Jama). If the input requirements lack sufficient information for test generation, HEPH automatically connects to the storage service, locates the missing details, and downloads them.

- Data traceability: HEPH searches the embedding database to establish traceability between the input requirements and relevant SWAD and ICD fragments. This step creates a mapped connection between the requirements and corresponding software architecture components.

- Test specification generation: Using the verification steps from the requirements and the identified SWAD and ICD fragments, HEPH generates both positive and negative test specifications, delivering complete coverage of all aspects of the requirement.

- Test implementation generation: Using the ICD fragments and the generated test specifications, HEPH creates executable tests in C/C++.

- Test execution: The generated tests are compiled and executed, with coverage data collected. The HEPH agent then analyzes test results and produces additional tests to cover any missing cases.
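To make the data traceability step more concrete, here is a toy retrieval sketch. It is not NVIDIA's implementation: word-count vectors and cosine similarity stand in for a learned embedding model and an embedding database, and the fragment ids and texts are invented for the example.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts. A real system would use a learned model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def trace_requirement(
    requirement: str, fragments: Dict[str, str], top_k: int = 2
) -> List[Tuple[str, float]]:
    """Return the ids and scores of the document fragments most similar to the requirement."""
    query = embed(requirement)
    scored = [(frag_id, cosine(query, embed(text))) for frag_id, text in fragments.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:top_k]


if __name__ == "__main__":
    # Hypothetical SWAD and ICD fragments.
    fragments = {
        "SWAD-1": "The camera service publishes frame timestamps to the perception module",
        "ICD-7": "camera_get_frame returns a frame buffer and a timestamp in microseconds",
        "SWAD-9": "The logging daemon rotates files every 24 hours",
    }
    requirement = "The camera frame timestamp shall be reported in microseconds"
    for frag_id, score in trace_requirement(requirement, fragments):
        print(frag_id, round(score, 3))
```

The retrieved fragments would then be supplied to the language model as context for generating test specifications, which is the role the workflow above assigns to the mapped SWAD and ICD fragments.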

Use proprietary AI tools

Rather than crafting a custom AI solution, many organizations opt for off-the-shelf AI automation tools, which come with pre-built capabilities like self-healing tests, AI-powered test generation, detailed reporting, visual and accessibility testing, LLM and chatbot testing, and automated test execution videos.

These tools prove to be beneficial in numerous aspects:

- Quick implementation: No need to develop AI models from the ground up.

- Lower maintenance: AI adapts automatically to application changes.

- Smooth integration: Works with existing test frameworks out of the box.

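Popular QA automation tools available today include Code Intelligence, Functionize, Testsigma, Katalon Studio, Applitools, TestCraft, Testim, Mabl, TestRigor, and ACCELQ. Open-source frameworks such as Selenium and Watir also appear on many shortlists; they are not AI-driven on their own, but they can serve as the execution layer beneath AI-assisted tooling.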

Each tool specializes in different areas of software testing, from functional and regression testing to performance and usability assessments. To choose the right tool, businesses should evaluate:

- Specific testing needs: Functional, performance, security, or accessibility testing.

- Integration & compatibility: Whether the tool aligns with current test frameworks.

- Scalability: Ability to handle growing testing demands.

- Ease of use & maintenance: Learning curve, automation efficiency, and long-term viability.

Also read: Top 10 trusted automation testing tools for your business

Step 3. Measure performance and refine

If a business chooses to develop an in-house AI testing tool, the tool must then be integrated into the existing test infrastructure so that workflows run smoothly. Once it is incorporated, the next step is to track performance to assess its effectiveness and identify areas for improvement.

Here are 7 key performance metrics to monitor:

- Test execution coverage

- Test execution rate

- Defect density

- Test failure rate

- Defect leakage

- Defect resolution time

- Test efficiency
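Several of these metrics reduce to simple ratios. The sketch below uses commonly cited definitions, but exact formulas vary between teams, so treat the function names and calculations as assumptions to adapt rather than a standard.

```python
def percent(part: float, whole: float) -> float:
    """Percentage helper that returns 0.0 instead of dividing by zero."""
    return 100.0 * part / whole if whole else 0.0


def execution_coverage(executed: int, planned: int) -> float:
    """Share of planned test cases that were actually executed, in percent."""
    return percent(executed, planned)


def failure_rate(failed: int, executed: int) -> float:
    """Share of executed test cases that failed, in percent."""
    return percent(failed, executed)


def defect_leakage(found_after_release: int, found_before_release: int) -> float:
    """Share of all known defects that escaped to production, in percent."""
    return percent(found_after_release, found_after_release + found_before_release)


def defect_density(defects: int, kloc: float) -> float:
    """Defects per thousand lines of code (KLOC)."""
    return defects / kloc if kloc else 0.0


if __name__ == "__main__":
    print("Execution coverage:", execution_coverage(180, 200), "%")
    print("Failure rate:", failure_rate(9, 180), "%")
    print("Defect leakage:", defect_leakage(2, 38), "%")
    print("Defect density:", defect_density(12, 4.0), "per KLOC")
```

Tracking the same definitions before and after AI adoption matters more than which definition is chosen, since the goal is to compare like with like.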

Learn more: Essential QA metrics with examples to navigate software success

Following that, companies need to use performance insights to refine their AI software testing tools or adjust their software testing strategies accordingly. Fine-tuning algorithms and reconfiguring workflows are some typical actions to take for optimal AI-driven testing results.

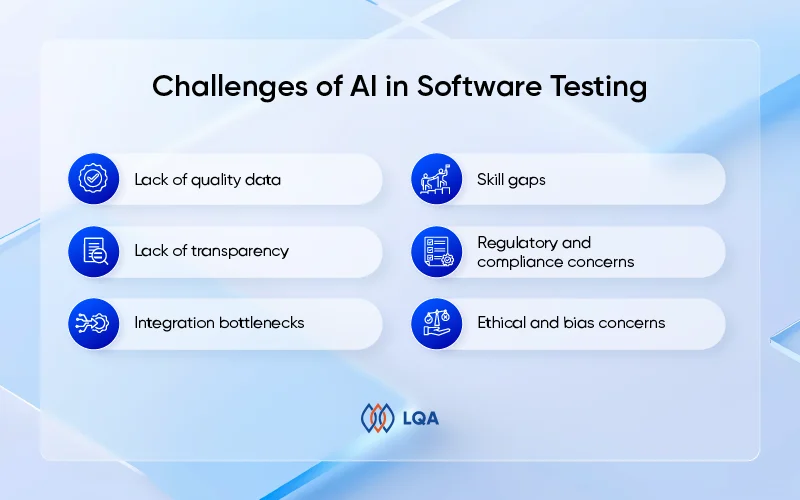

Challenges of AI in Software Testing


- Lack of quality data

AI models need large volumes of high-quality data to make accurate predictions and generate meaningful results.

In software testing, however, gathering sufficient and properly labeled data can be a huge challenge.

If the data used to train AI models is incomplete, inconsistent, or poorly structured, the AI tool may produce inaccurate results or fail to identify edge cases.

These data limitations can also hinder the AI’s ability to predict bugs effectively, resulting in missed defects or false positives.

Continuous data management and governance are therefore crucial for AI models to function at their full potential.

- Lack of transparency

One of the key challenges with advanced AI models, particularly deep learning systems, is their “black-box” nature.

These models often do not provide clear explanations about how they arrive at specific conclusions or decisions. For example, testers may find it difficult to understand why an AI model flags a particular bug, prioritizes certain test cases, or chooses a specific path in test execution.

This lack of transparency can create trust issues among testing teams, who may hesitate to rely on AI-generated insights without clear explanations.

In addition, without transparency, it becomes difficult for teams to troubleshoot or fine-tune AI predictions, which may ultimately slow down the adoption of AI-driven testing.

- Integration bottlenecks

Integrating AI-based testing tools with existing testing frameworks and workflows can be a complex and time-consuming process.

Many organizations already use well-established DevOps pipelines, CI/CD workflows, and manual testing protocols.

Introducing AI tools into these processes often requires significant customization for smooth interaction with legacy systems.

In some cases, AI tools for testing may need to be completely reconfigured to function within a company’s existing infrastructure. This can lead to delays in deployment and require extra resources, especially in large, established organizations where systems are deeply entrenched.

As a result, businesses must carefully evaluate the compatibility of AI tools with their existing processes to minimize friction and maximize efficiency.

- Skill gaps

Another major challenge is the shortage of in-house expertise in AI and ML. Successful implementation of AI in testing software demands not only a basic understanding of AI principles but also advanced knowledge of data analysis, model training, and optimization.

Many traditional QA professionals may not have the skills necessary to configure, refine, or interpret AI models, making the integration of AI tools a steep learning curve for existing teams.

Companies may thus need to invest in training or hire specialists in AI and ML to bridge this skills gap.

Learn more: Develop an effective IT outsourcing strategy

- Regulatory and compliance concerns

Industries such as finance, healthcare, and aviation are governed by stringent regulations that impose strict rules on data security, privacy, and the transparency of automated systems.

AI models, particularly those used in testing, must be configured to adhere to these industry-specific standards.

For example, AI tools used in healthcare software testing must comply with HIPAA regulations to protect sensitive patient data.

These regulatory concerns can complicate AI adoption, as businesses may need to verify that their AI tools meet compliance standards before deploying them for testing.

- Ethical and bias concerns

AI models learn from historical data, which means they are vulnerable to biases present in that data.

If the data used to train AI models is skewed or unrepresentative, it can result in biased predictions or unfair test prioritization.

To mitigate these risks, it’s essential to regularly audit AI models and train them with diverse and representative data.

FAQs about AI in Software Testing

How is AI testing different from manual software testing?

AI testing outperforms manual testing in speed, accuracy, and scalability. While manual testing is time-consuming, prone to human errors, and limited in coverage, AI testing executes thousands of tests quickly with consistent results and broader coverage. AI testing also reduces long-term costs through automation, offering self-healing scripts that adapt to software changes. In contrast, manual testing requires frequent updates and more resources, making it less suitable for large-scale projects.

How is AI used in software testing?

AI is used in software testing to automate key processes such as test case generation, test data creation, and issue identification. It supports continuous testing in DevOps and CI/CD pipelines, delivering rapid feedback and smoother workflows. AI also helps maintain tests by automatically adapting to changes in the application and performs visual testing to detect UI inconsistencies. This leads to improved efficiency, faster execution, and higher accuracy in defect identification.

Will AI take over QA?

No, AI will not replace QA testers but will enhance their work. While AI can automate repetitive tasks, detect patterns, and even predict defects, software quality assurance goes beyond just running tests. It requires critical thinking, creativity, and contextual understanding, which are human strengths.

Ready to Take Software Testing to the Next Level with AI?

There is no doubt that AI has transformed software testing, from automated test case and test data generation to continuous testing within DevOps and CI/CD pipelines.

Implementing AI in software testing starts with identifying key areas for improvement, continues with choosing between custom-built solutions and proprietary tools, and ends with continuously measuring performance against defined KPIs.

That said, successful software testing with AI isn’t without challenges. Issues like data quality, transparency, integration, and skill gaps can hinder progress. That’s why organizations must proactively address these obstacles for a smooth transition to AI-driven testing.

At LQA, our team of experienced testers combines well-established QA processes with innovative AI-infused capabilities. We use cutting-edge AI testing tools to seamlessly integrate intelligent automation into our systems, bringing unprecedented accuracy and operational efficiency.

Reach out to LQA today to empower your software testing strategy and drive quality to the next level.

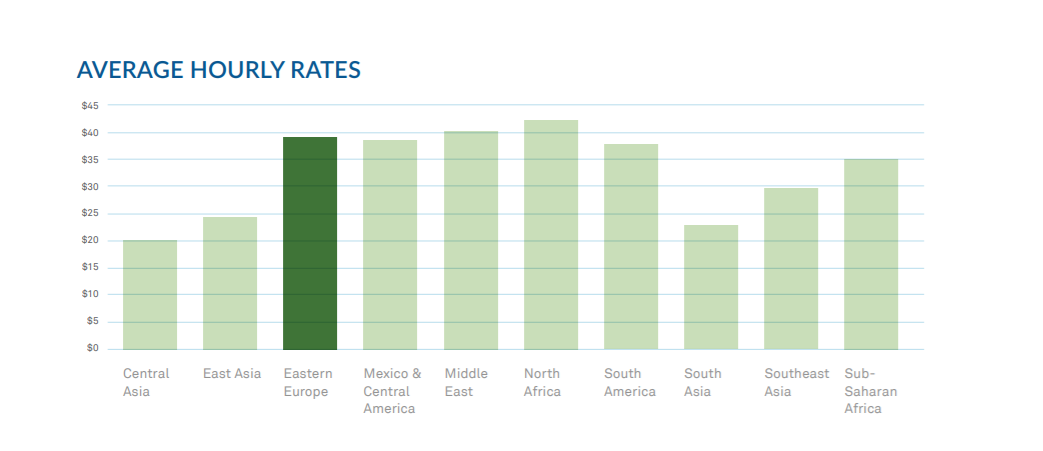

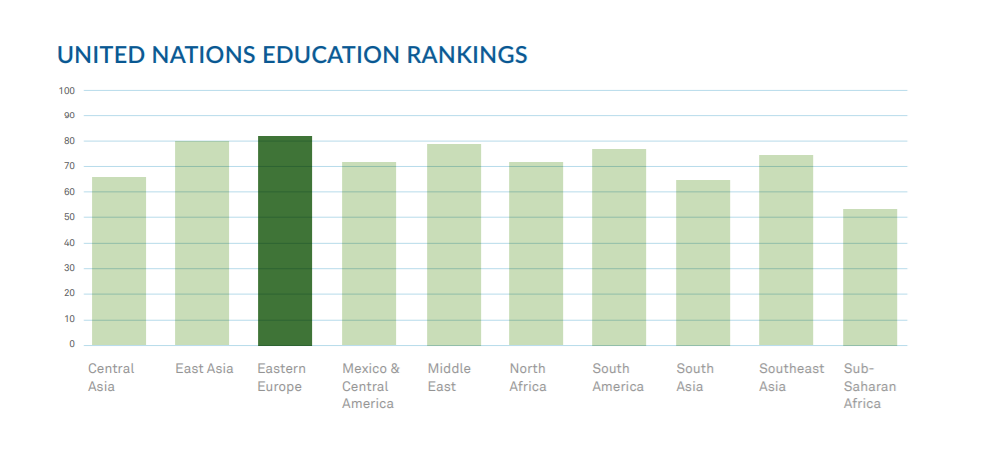

