How to Perform Native App Testing: A Complete Walkthrough

Native apps are known for their high performance, seamless integration with device features, and superior user experience compared to hybrid or web apps. But even the most well-designed native app can fail if it isn’t thoroughly tested. Bugs, compatibility issues, or performance lags can lead to poor reviews and user drop-off.

In this article, we’ll walk businesses through the purpose and methodologies of native app testing, explore different types of tests, and outline the key criteria to look for in a trusted native app testing partner.

By the end, companies will gain the insights needed to manage external testing teams with confidence and drive better app outcomes.

Now, let’s start!

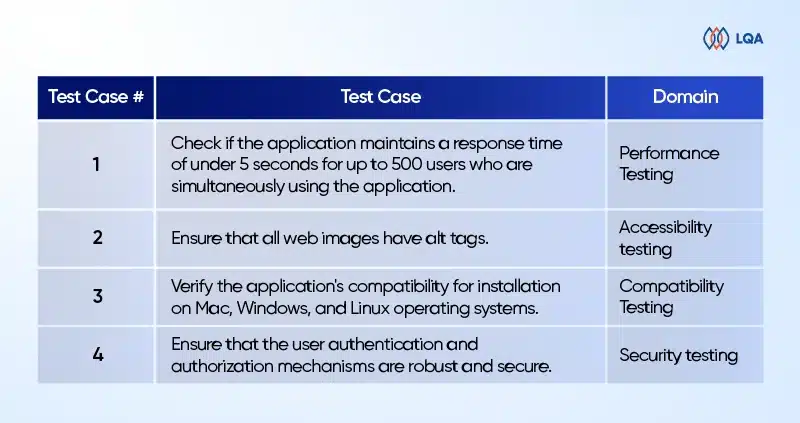

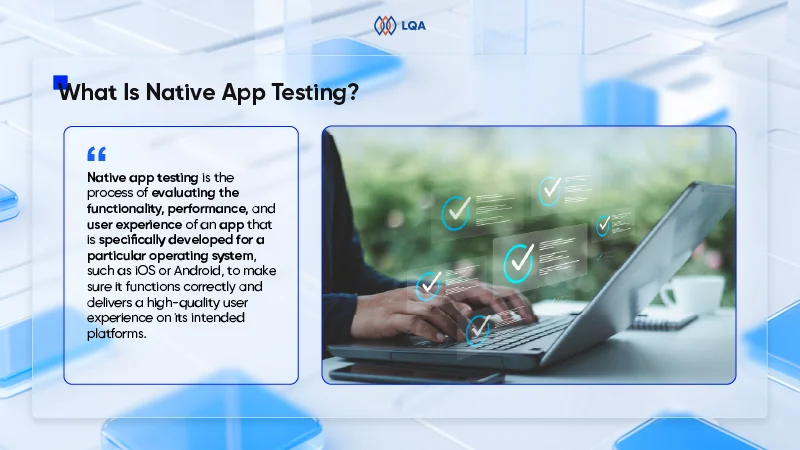

What Is Native App Testing?

Native app testing is the process of evaluating the functionality, performance, and user experience of an app built specifically for a particular operating system, such as iOS or Android, to make sure it works correctly and delivers a high-quality experience on its intended platform. These apps are referred to as “native” because they are designed to take full advantage of the features and capabilities of a specific OS.

Definition of native app testing

The purpose of native app testing is to determine whether native applications work correctly on the platform for which they are intended, evaluating their functionality, performance, usability, and security.

Robust testing minimizes the risk of critical issues and improves the app’s chances of success in a competitive app market.

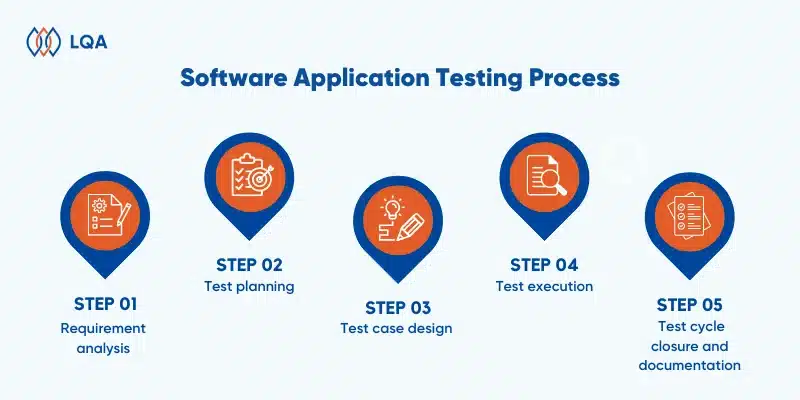

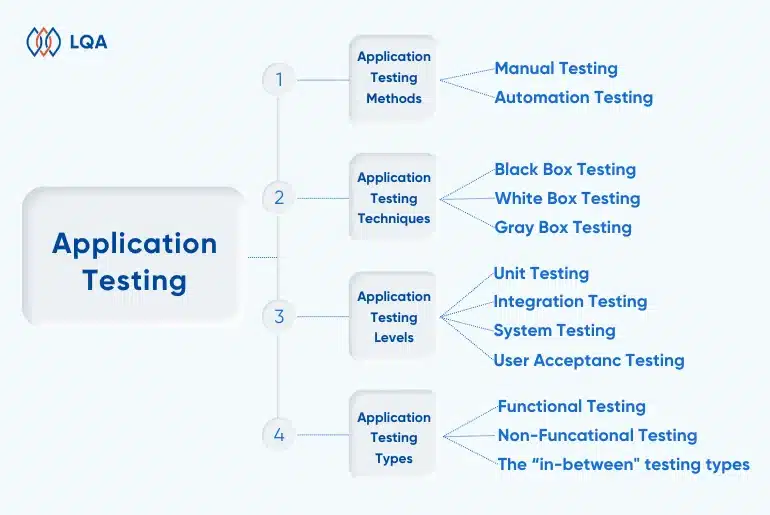

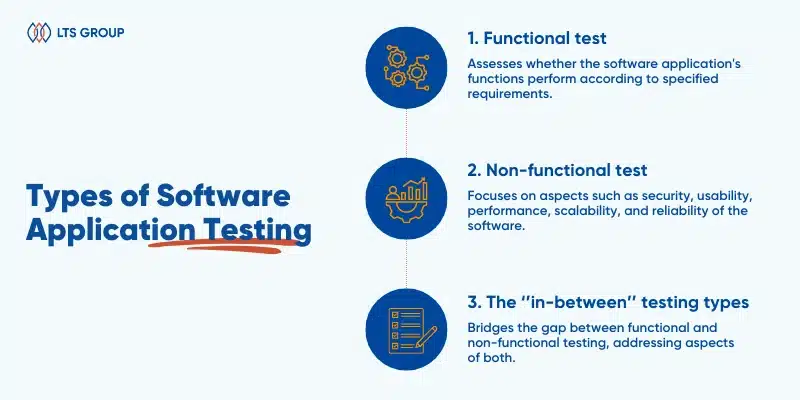

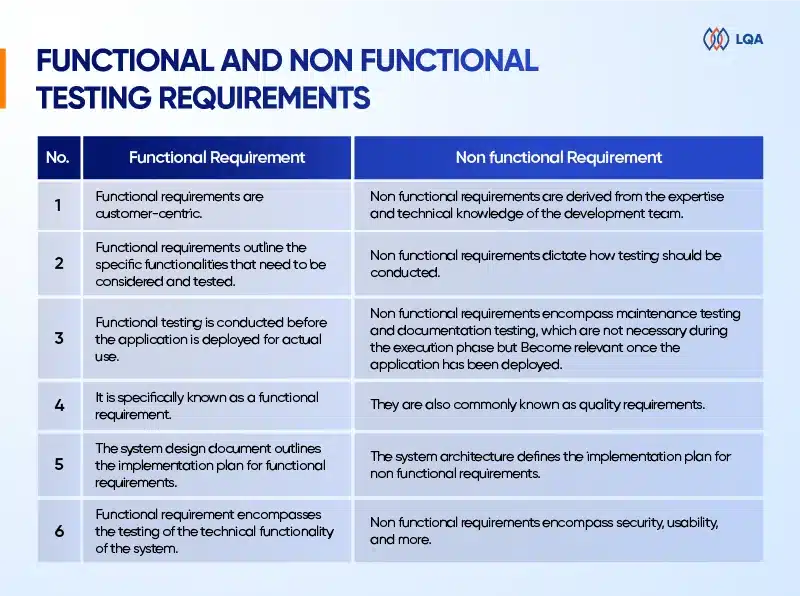

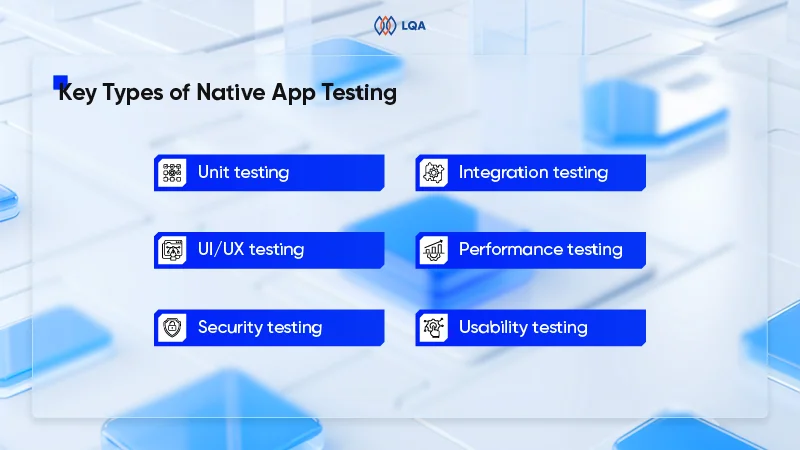

Key Types of Native App Testing

Key types of native app testing

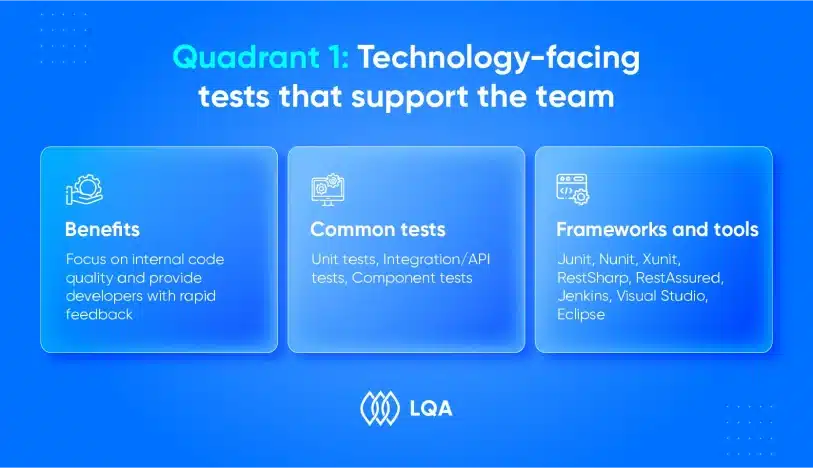

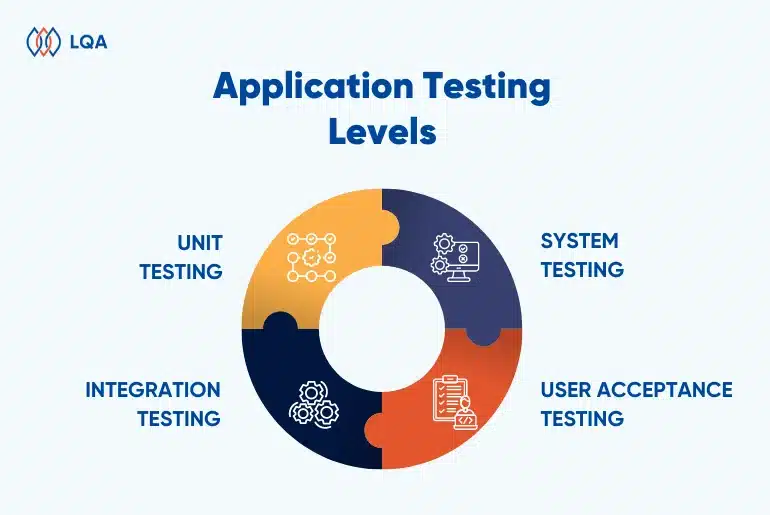

Unit testing

- Purpose: Verifies that individual functions or components of the application work correctly in isolation.

- Why it matters: Detecting and fixing issues at the unit level helps reduce downstream bugs and improves code stability early in the development cycle.
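As an illustration only, the sketch below shows what a unit test looks like, using Python’s built-in `unittest` module. The `format_price` function is a hypothetical stand-in for app logic; in a real native project the same pattern would usually be written with the platform’s own framework, such as XCTest on iOS or JUnit on Android.

```python
import unittest


def format_price(cents: int, currency: str = "USD") -> str:
    """Hypothetical app function: render an amount in cents as a display string."""
    if cents < 0:
        raise ValueError("amount cannot be negative")
    symbols = {"USD": "$", "EUR": "€"}
    # Unknown currencies fall back to their code followed by a space.
    symbol = symbols.get(currency, currency + " ")
    return f"{symbol}{cents // 100}.{cents % 100:02d}"


class FormatPriceTest(unittest.TestCase):
    """Each test checks format_price in isolation: no UI, network, or device."""

    def test_whole_and_fractional_parts(self):
        self.assertEqual(format_price(1999), "$19.99")

    def test_cents_are_zero_padded(self):
        self.assertEqual(format_price(105), "$1.05")

    def test_unknown_currency_uses_code(self):
        self.assertEqual(format_price(500, "GBP"), "GBP 5.00")

    def test_negative_amount_is_rejected(self):
        with self.assertRaises(ValueError):
            format_price(-1)
```

Saved as a file (for example, a hypothetical `test_pricing.py`), the suite can be run with `python -m unittest test_pricing.py`. Because each test checks a single behavior without any UI or device involved, failures point straight at the faulty logic.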

Integration testing

- Purpose: Checks how different modules of the app work together – like APIs, databases, and front-end components.

- Why it matters: It helps identify communication issues between components, preventing system failures that can disrupt core user flows.
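A minimal sketch of an integration test, assuming a hypothetical checkout flow: a real SQLite database (in memory, via Python’s standard `sqlite3` module) is wired to a fake payment API, and the check confirms that both layers agree on the final order status. The class names and the decline rule are illustrative, not taken from any specific app.

```python
import sqlite3


class OrderRepository:
    """Data layer: persists orders in SQLite (an in-memory database here)."""

    def __init__(self, conn: sqlite3.Connection):
        self.conn = conn
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS orders "
            "(id INTEGER PRIMARY KEY, total INTEGER, status TEXT)"
        )

    def save(self, total: int, status: str) -> int:
        cur = self.conn.execute(
            "INSERT INTO orders (total, status) VALUES (?, ?)", (total, status)
        )
        self.conn.commit()
        return cur.lastrowid

    def status_of(self, order_id: int) -> str:
        row = self.conn.execute(
            "SELECT status FROM orders WHERE id = ?", (order_id,)
        ).fetchone()
        return row[0]


class FakePaymentApi:
    """Hypothetical stand-in for a remote payment API: declines amounts over a limit."""

    def __init__(self, limit: int):
        self.limit = limit

    def charge(self, amount: int) -> bool:
        return amount <= self.limit


class CheckoutService:
    """Business layer that must coordinate the payment API and the database."""

    def __init__(self, repo: OrderRepository, payments: FakePaymentApi):
        self.repo = repo
        self.payments = payments

    def checkout(self, total: int) -> int:
        status = "paid" if self.payments.charge(total) else "declined"
        return self.repo.save(total, status)


def run_integration_check() -> dict:
    """Wire the real data layer to the fake API and read back what was stored."""
    conn = sqlite3.connect(":memory:")
    service = CheckoutService(OrderRepository(conn), FakePaymentApi(limit=10_000))
    ok_id = service.checkout(2_500)
    declined_id = service.checkout(50_000)
    results = {
        "ok": service.repo.status_of(ok_id),
        "declined": service.repo.status_of(declined_id),
    }
    conn.close()
    return results
```

Calling `run_integration_check()` returns `{'ok': 'paid', 'declined': 'declined'}`. Faking the remote API keeps the check deterministic while still exercising the real database layer and the hand-off between components.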

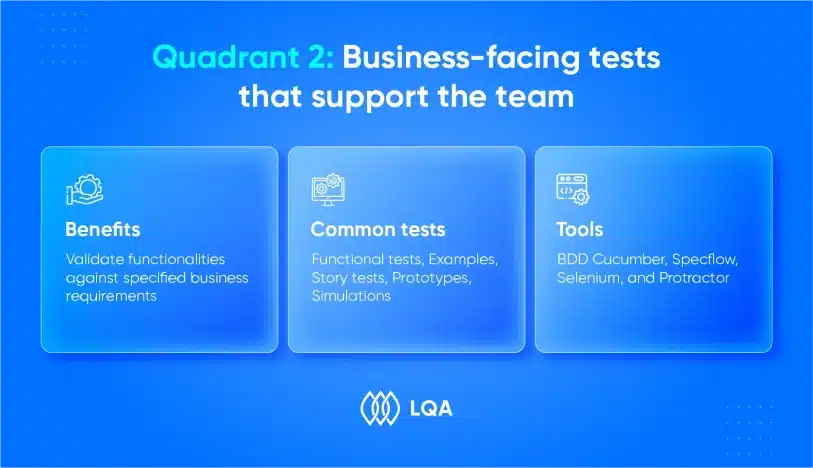

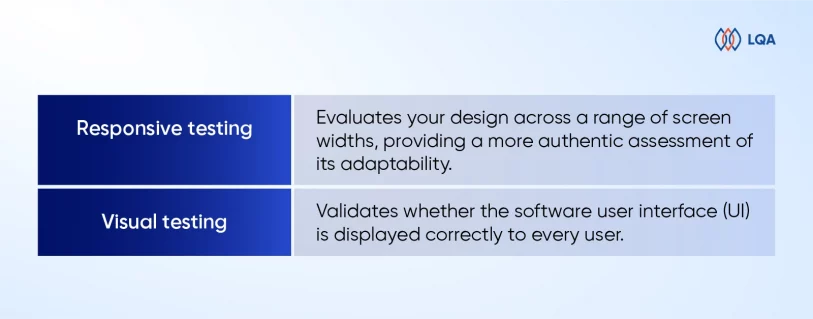

UI/UX testing

- Purpose: Evaluates how the app looks and feels to users – layouts, buttons, animations, and screen responsiveness.

- Why it matters: A consistent and intuitive interface enhances user satisfaction and directly impacts adoption and retention rates.

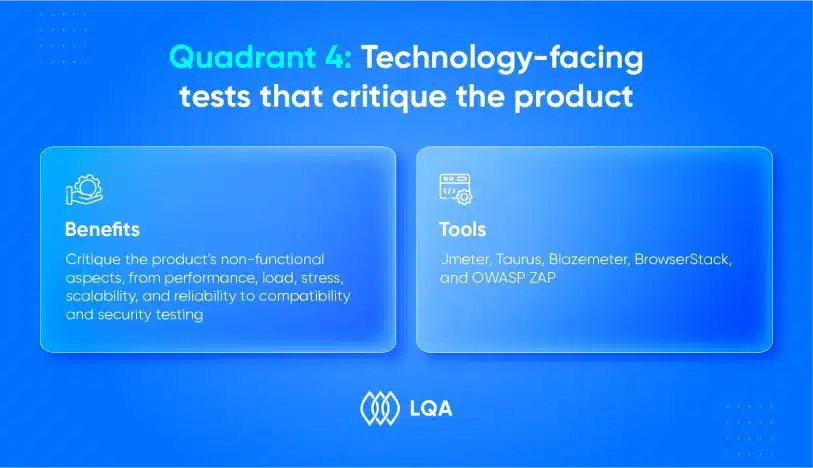

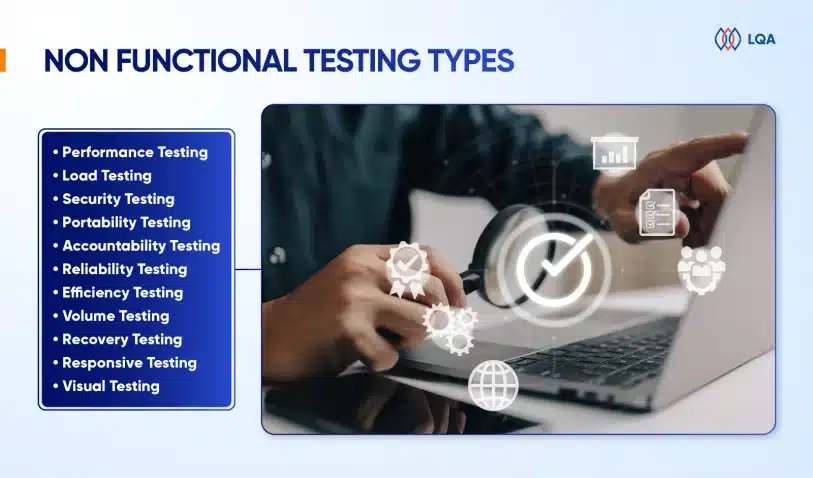

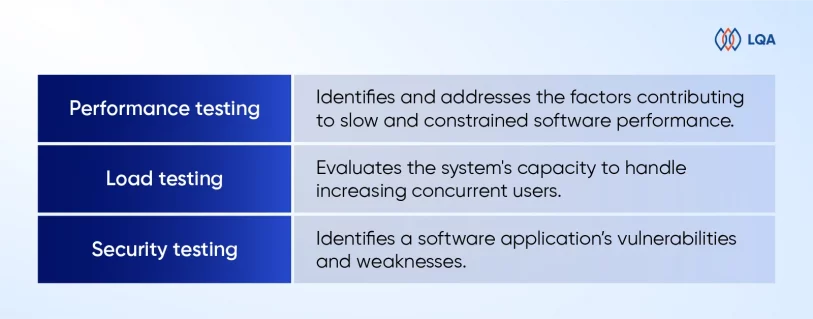

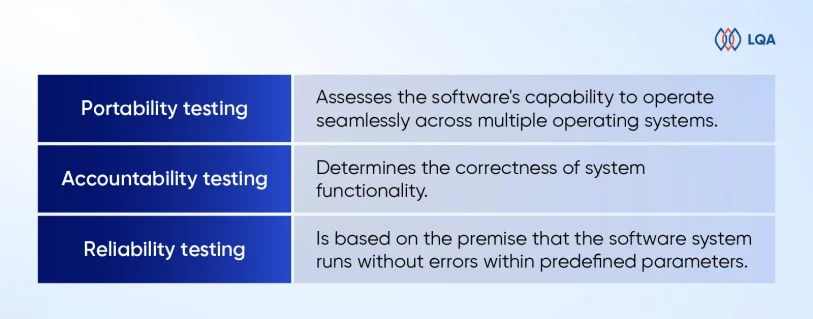

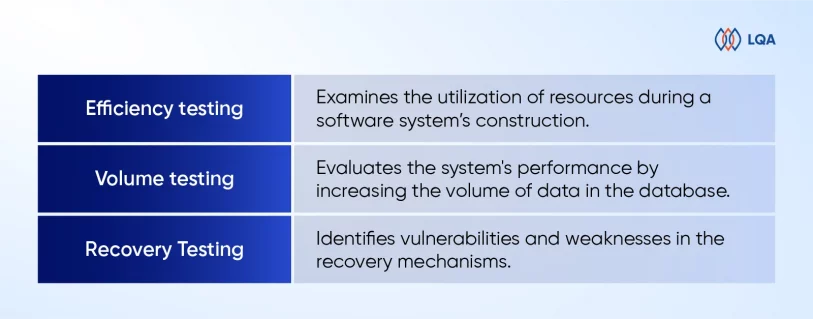

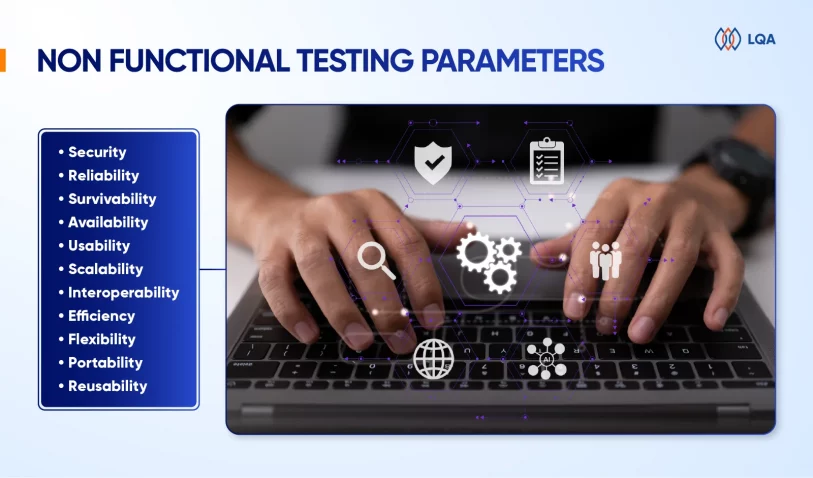

Performance testing

- Purpose: Tests speed, responsiveness, and stability under different network conditions and device loads.

- Why it matters: Ensuring smooth performance minimizes app crashes and load delays, both of which directly undermine user engagement.
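The sketch below shows one common way to turn “speed and responsiveness” into a pass/fail check: time an action repeatedly, then compare the 95th-percentile duration against a budget. The network profiles, latency values, and budget are hypothetical; on real devices, teams would typically collect such timings with platform tools such as Xcode Instruments or the Android Studio profilers rather than a script like this.

```python
import math
import random
import time
from typing import Callable, List

# Hypothetical added latency (ms) per request for each simulated network profile.
NETWORK_PROFILES = {"wifi": 20, "4g": 80, "3g": 300}


def measure(action: Callable[[], object], runs: int = 50) -> List[float]:
    """Run an action repeatedly and return each duration in milliseconds."""
    durations = []
    for _ in range(runs):
        start = time.perf_counter()
        action()
        durations.append((time.perf_counter() - start) * 1000)
    return durations


def percentile(samples: List[float], pct: float) -> float:
    """Nearest-rank percentile, with pct between 0 and 100."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def simulated_load_times(profile: str, runs: int = 100, seed: int = 7) -> List[float]:
    """Fake screen-load times: base latency plus up to 50% random jitter."""
    rng = random.Random(seed)
    base = NETWORK_PROFILES[profile]
    return [base + rng.uniform(0, base * 0.5) for _ in range(runs)]


def meets_budget(samples: List[float], p95_budget_ms: float) -> bool:
    """Pass only if the 95th-percentile sample is within the budget."""
    return percentile(samples, 95) <= p95_budget_ms
```

With a 250 ms budget, `meets_budget(simulated_load_times("wifi"), 250)` passes, while the same check on the `"3g"` profile fails because every simulated 3G load takes at least 300 ms. A percentile is used instead of an average so that occasional slow outliers, which users do notice, are not hidden.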

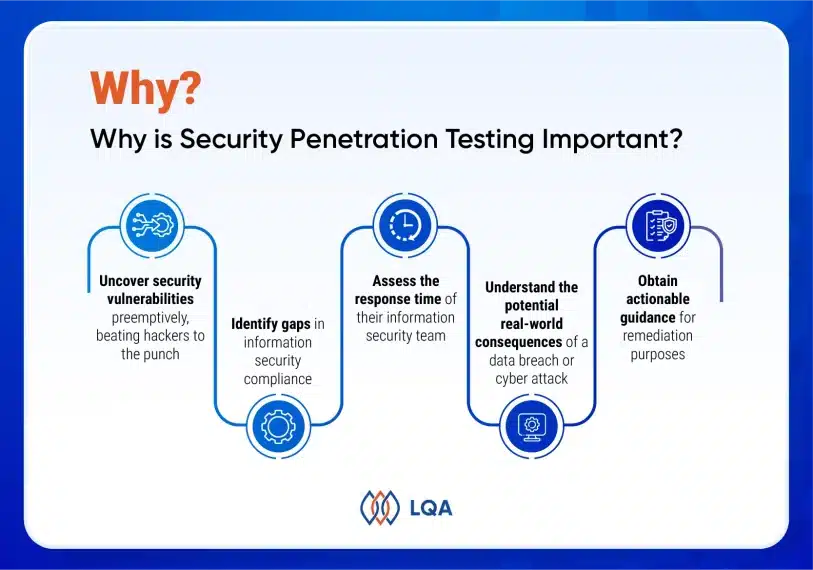

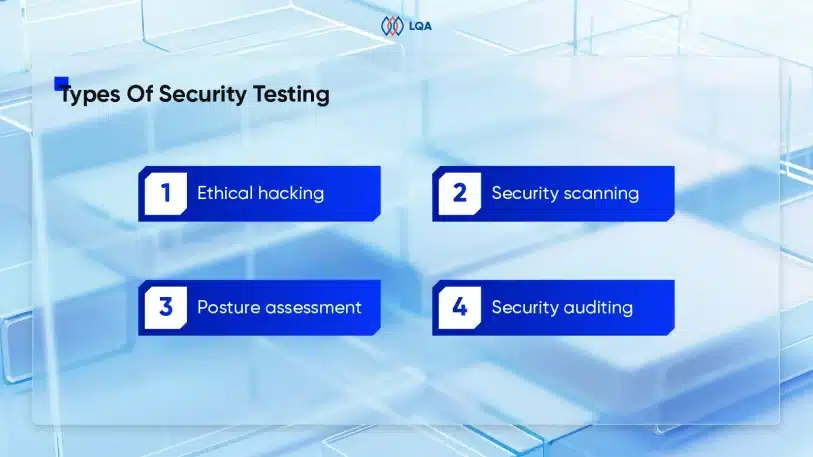

Security testing

- Purpose: Assesses how well the app protects sensitive data and resists unauthorized access or breaches.

- Why it matters: Addressing security gaps is essential to protect sensitive information, meet compliance requirements, and maintain user trust.
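Security testing spans many techniques, from penetration testing to code review. As one small, automatable example, the sketch below audits a hypothetical app configuration for two common mobile weaknesses: endpoints reached over plain HTTP and secrets embedded in the app bundle. The config keys and the keyword pattern are assumptions for illustration; dedicated scanners use far larger, tuned rule sets.

```python
import re
from typing import Dict, List

# Assumed naming convention: keys that look like credentials should never ship in the app.
SECRET_KEY_PATTERN = re.compile(r"(api[_-]?key|secret|password|token)", re.IGNORECASE)


def audit_config(config: Dict[str, str]) -> List[str]:
    """Return human-readable findings for insecure values in an app config."""
    findings = []
    for key, value in config.items():
        # Unencrypted transport exposes data to anyone on the same network.
        if value.lower().startswith("http://"):
            findings.append(f"{key}: uses unencrypted HTTP")
        # Anything bundled with the app can be extracted from the binary.
        if SECRET_KEY_PATTERN.search(key) and value:
            findings.append(f"{key}: secret value embedded in app config")
    return findings


# Hypothetical config as it might be bundled with a mobile build.
SAMPLE_CONFIG = {
    "api_base_url": "http://api.example.com",
    "analytics_url": "https://analytics.example.com",
    "payment_api_key": "sk_test_123",
}
```

For `SAMPLE_CONFIG`, `audit_config` reports the HTTP endpoint and the embedded key. Run as part of the build, a check like this catches such issues before a release candidate reaches testers; it complements, rather than replaces, testing against the running app.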

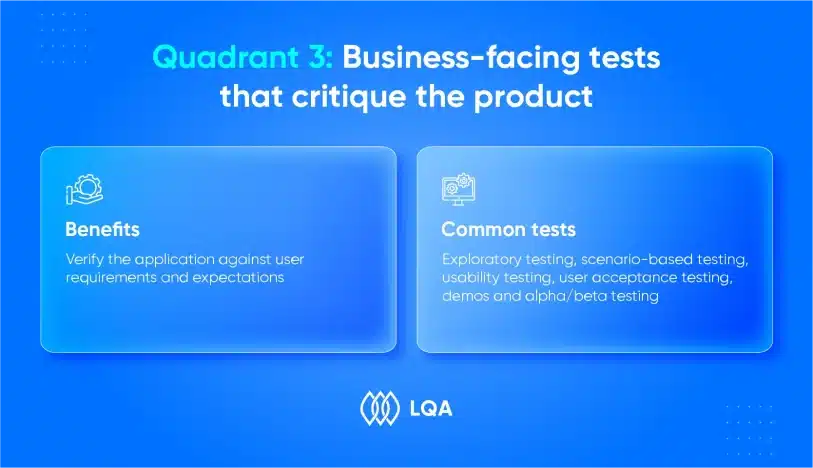

Usability testing

- Purpose: Gathers real feedback from users to identify friction points, confusing flows, or overlooked design flaws.

- Why it matters: Feedback from usability testing guides design improvements and ensures that the app aligns with user expectations and behaviors.

Learn more: Software application testing: Different types & how to do?

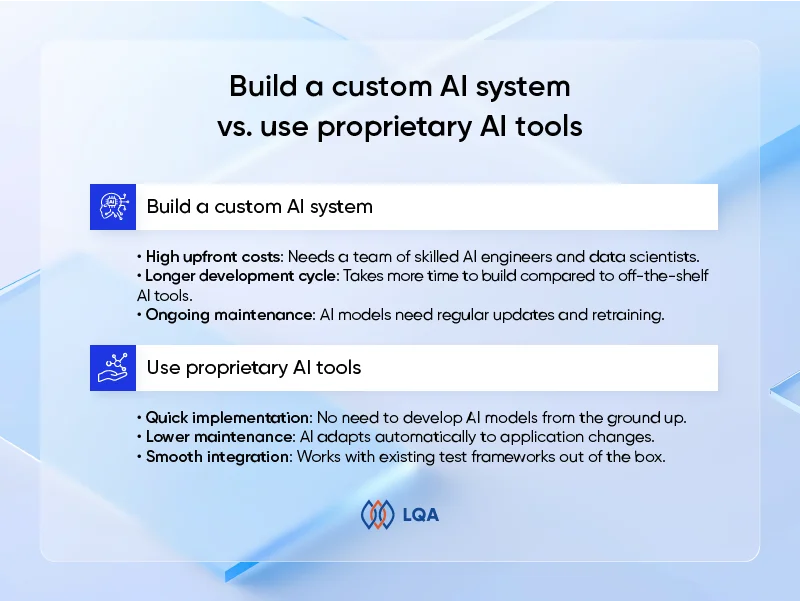

Choosing the Right Approach to Native App Testing: In-House, Outsourced, or Hybrid?

One of the most strategic decisions in native app development is determining how testing will be handled. The approach taken can significantly affect not only time-to-market but also product quality, development efficiency, and long-term scalability.

Choose the right approach to native app testing

In-house testing

In-house testing involves building a dedicated QA team within the organization. This approach offers deep integration between testers and developers, fostering immediate feedback loops and domain knowledge retention.

Maintaining in-house teams makes sense for enterprises or tech-first startups planning frequent updates and long-term support.

Best fit for:

- Companies developing complex or security-sensitive apps (e.g., fintech, healthcare) that require strict control over IP and data.

- Organizations with established development and QA teams capable of building and maintaining internal infrastructure.

- Long-term products with frequent feature updates and the need for cross-functional collaboration between teams.

Challenges:

- High cost of QA talent acquisition and retention, particularly for senior test engineers with mobile expertise.

- Requires significant upfront investment in devices, testing labs, and automation tools.

- May face resource bottlenecks during high-demand development cycles unless teams are over-provisioned.

Outsourced testing

With outsourced testing, businesses partner with QA vendors to handle testing either partially or entirely.

This model not only reduces operational burden but also gives businesses quick access to experienced testers, broad device coverage, and advanced tools. In fact, 57% of executives cite cost reduction as the primary reason for outsourcing, particularly through staff augmentation for routine IT tasks.

Best fit for:

- Startups or SMEs that lack internal QA resources and are seeking cost-effective access to mobile testing expertise.

- Projects that require short-term testing capacity or access to specialized skills like performance testing, accessibility, or localization.

- Businesses looking to accelerate time-to-market without sacrificing testing depth.

Challenges:

- Reduced visibility and control over daily test execution and issue resolution timelines.

- Coordination challenges due to time zone or cultural differences (especially in offshore models).

- Requires due diligence to ensure vendor quality, security compliance, and confidentiality (e.g., NDAs, secure environments).

Hybrid model

The hybrid approach for testing allows companies to retain strategic oversight while extending QA capabilities through external partners. In this setup, internal QA handles core feature testing and critical flows, while external teams take care of regression, performance, or multi-device testing.

Best fit for:

- Organizations that want to retain strategic control over core testing (e.g., test design, critical modules) while outsourcing repetitive or specialized tasks.

- Apps with variable testing workloads, such as cyclical releases or seasonal feature spikes.

- Companies scaling up who need to balance cost and flexibility without compromising on quality.

Challenges:

- Needs strong project management and alignment mechanisms to coordinate internal and external teams.

- Risk of inconsistent quality standards unless test plans, tools, and reporting are well integrated.

- May involve longer onboarding to align both sides on tools, workflows, and business logic.

5 Must-Have Criteria for a Trusted Native App Testing Partner

While every business has its own unique needs, there are key qualities that any reliable native app testing partner should consistently deliver. Below, we break down the 5 essential criteria that an effective software testing partner must meet and explain why they matter.

Choose a trusted native app testing partner

Proven experience in native app testing

A testing partner’s experience should extend beyond general QA into deep, hands-on expertise in native mobile environments. Native app testing demands unique familiarity with OS-level APIs, device hardware integration, and platform-specific performance constraints, whether it’s iOS/Android for mobile or Windows/macOS for desktop.

- For mobile, this means understanding how apps behave under different OS versions, permission models, battery usage constraints, and device-specific behaviors (e.g., Samsung vs. Pixel).

- For desktop, experience with native frameworks such as Win32 or Cocoa, and with platform languages such as Swift, is critical, especially for apps relying on GPU usage, file system access, or local caching.

Businesses should ask for case studies or proof points from their own industry or use case, such as finance, healthcare, or e-commerce, where reliability, compliance, or UX is critical.

Certifications like ISTQB, ASTQB-Mobile, or Google Developer Certifications reinforce credibility, especially when combined with real-world results.

Robust infrastructure and real device access

A trusted testing partner must offer access to a wide range of real devices and system environments that reflect the business’s actual user base across both mobile and desktop platforms. This includes varying operating systems, screen sizes, hardware specs, and network conditions. Emulators and simulators cannot fully reproduce real hardware behavior, so testing on real devices yields more accurate performance insights and reduces post-launch issues.
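One way to turn “reflect the actual user base” into a concrete device list is to rank device and OS combinations by their share of active users and pick the top ones until a coverage target is met. The usage figures below are invented for illustration; in practice they would come from the app’s own analytics.

```python
from typing import List, Tuple

# Hypothetical analytics export: (device, OS version, share of active users).
USAGE = [
    ("Pixel 8", "Android 14", 0.18),
    ("Galaxy S23", "Android 14", 0.22),
    ("Galaxy A14", "Android 13", 0.12),
    ("iPhone 15", "iOS 17", 0.25),
    ("iPhone 12", "iOS 16", 0.15),
    ("iPad (9th gen)", "iPadOS 17", 0.05),
    ("Moto G Power", "Android 12", 0.03),
]


def pick_devices(
    usage: List[Tuple[str, str, float]], target: float
) -> Tuple[List[Tuple[str, str]], float]:
    """Greedily add the most-used device/OS pairs until `target` share is covered."""
    chosen: List[Tuple[str, str]] = []
    covered = 0.0
    for device, os_version, share in sorted(usage, key=lambda row: row[2], reverse=True):
        if covered >= target:
            break
        chosen.append((device, os_version))
        covered += share
    return chosen, round(covered, 4)
```

With a 90% target, `pick_devices(USAGE, 0.9)` selects five device/OS pairs covering 92% of this hypothetical user base, leaving the long tail for spot checks.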

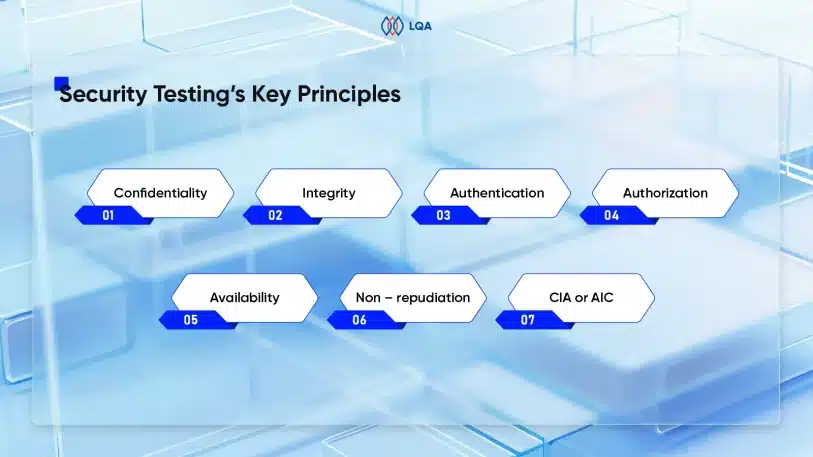

Security, compliance, and confidentiality

Given the sensitive nature of app data, the native app testing partner must adhere to strict security standards and compliance frameworks (e.g., ISO 27001, SOC 2, GDPR).

More than just certification, this means implementing security-conscious testing environments that prevent data leaks, applying techniques like data masking or anonymization during production-like tests, and enforcing strict protocols such as signed NDAs, role-based access, and secure handling of test assets and code.

It’s also important to note that native desktop apps often interact more deeply with a system’s file structure or network stack than mobile apps do, which increases the surface area for security vulnerabilities.
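The data masking and anonymization step described in this section can be sketched as follows, assuming a hypothetical user record with an ID, email, and card number. Identifiers are replaced with salted hashes and contact and payment details are partially masked, so test data keeps a realistic shape without exposing real users. The salt value and field names are illustrative assumptions.

```python
import hashlib
from typing import Dict

# Hypothetical salt; a real one would be per-environment and kept out of source control.
SALT = b"rotate-me-per-environment"


def pseudonymize(value: str) -> str:
    """Replace an identifier with a stable, non-reversible token."""
    return hashlib.sha256(SALT + value.encode("utf-8")).hexdigest()[:12]


def mask_email(email: str) -> str:
    """Keep the first character and the domain: alice@example.com -> a****@example.com."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}****@{domain}"


def mask_card(number: str) -> str:
    """Show only the last four digits of a card number."""
    digits = "".join(ch for ch in number if ch.isdigit())
    return "*" * (len(digits) - 4) + digits[-4:]


def mask_record(record: Dict[str, str]) -> Dict[str, str]:
    """Produce a test-safe copy of a production-like user record."""
    return {
        "user_id": pseudonymize(record["user_id"]),
        "email": mask_email(record["email"]),
        "card": mask_card(record["card"]),
        "country": record["country"],  # low-risk field kept for realistic coverage
    }
```

Strictly speaking, salted hashing is pseudonymization rather than anonymization: under GDPR, pseudonymized data still counts as personal data, so a masked dataset like this should still sit behind the access controls described above.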

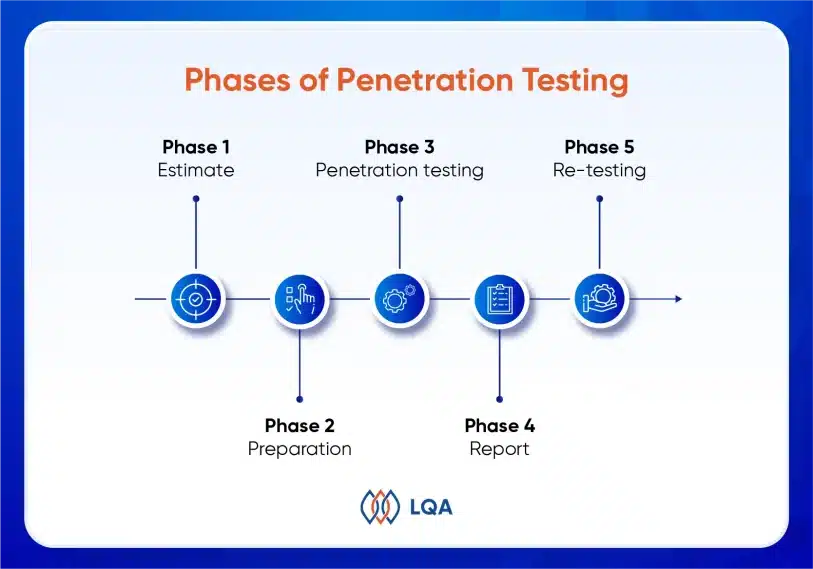

Communication and collaboration practices

Clear, consistent communication is essential when working with an external testing partner. Businesses should expect regular updates on progress, test results, and issues so they can stay informed and make timely decisions. The partner should follow a structured process for planning, executing, and retesting and be responsive when priorities shift.

They also need to work smoothly within the company’s existing tools and workflows, whether that’s Jira for tracking or Slack for quick updates. Good collaboration helps avoid delays, improves visibility, and keeps the product moving forward efficiently.

Scalability and business alignment

An effective testing partner must offer the ability to scale resources in line with evolving product demands, whether ramping up for major releases or optimizing during low-activity phases. Flexible scaling helps make efficient use of time and budget without compromising test coverage.

Equally important is the partner’s alignment with broader business objectives. Testing processes should reflect the development pace, release cadence, and quality benchmarks of the product. A well-aligned partner contributes not only to immediate project goals but also to long-term product success and market readiness.

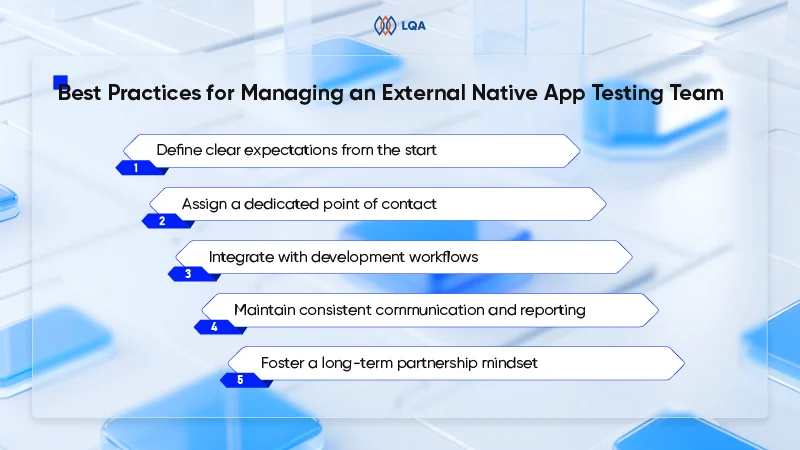

Best Practices for Managing an External Native App Testing Team

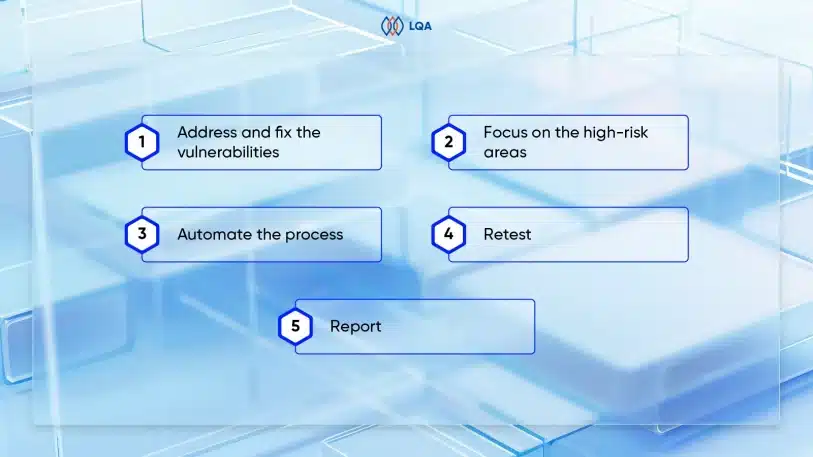

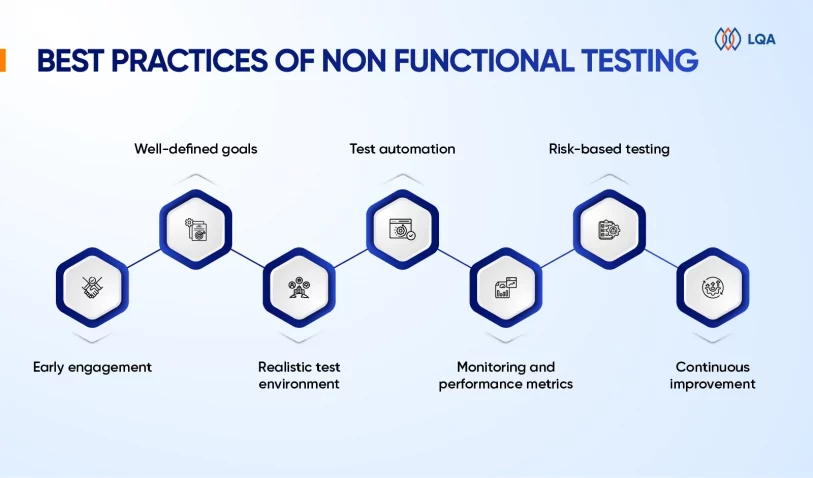

For businesses exploring outsourced native app testing, effective team management is key to turning that investment into measurable outcomes. The 5 practices below help establish alignment, reduce friction, and unlock real value from the partnership.

Manage an external native app testing team

Define clear expectations from the start

A productive partnership begins with a clearly defined scope of work. Outline key performance indicators (KPIs), testing coverage objectives, timelines, and preferred communication channels from the outset.

Make sure the external testing team understands the product’s business goals, user profiles, and high-risk areas, whether it’s data sensitivity, user load, or platform-specific edge cases. Early alignment helps eliminate confusion, reduces the risk of missed expectations, and makes it easier to track progress against measurable outcomes.

Assign a dedicated point of contact

Appointing a liaison on both sides helps reduce miscommunication and speeds up decision-making. This role is responsible for managing test feedback loops, flagging blockers, and facilitating coordination across internal and external teams.

Integrate with development workflows

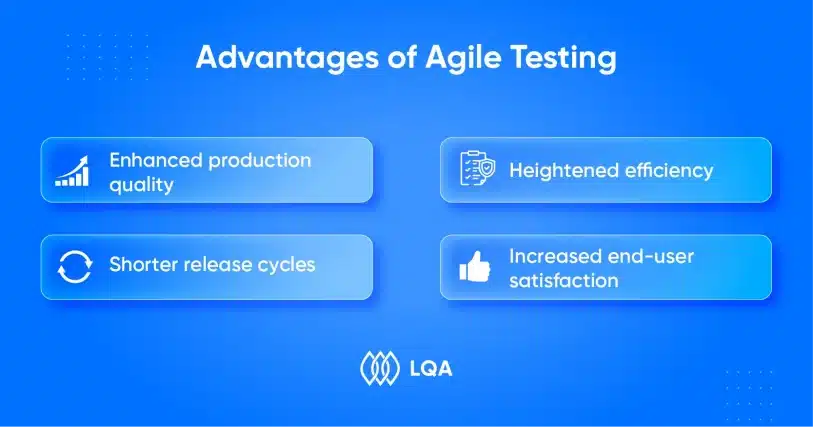

Embedding QA professionals within Agile teams enhances collaboration and accelerates issue resolution. When testers are involved from the outset, they can identify defects earlier, reducing costly rework and ensuring development stays on track.

In today’s multi-platform environment, where apps must perform reliably across operating systems, devices, and browsers, integrating QA into Agile sprints transforms compatibility testing into a continuous effort. Rather than treating it as a final-stage checklist, teams can proactively detect and resolve issues such as layout breaks on specific devices or OS-related performance lags.

Maintain consistent communication and reporting

Regular updates between the internal team and the external testing partner help avoid misunderstandings and keep projects on track. Weekly syncs or sprint reviews ensure that testing progress, bug status, and priorities are clearly understood.

Use structured reports and dashboards to show key metrics like test coverage, defect severity, and retesting status. This lets businesses assess product quality quickly without wading through technical detail.

Connecting the external team to tools already in use, such as Jira, Slack, or Microsoft Teams, helps keep communication smooth. Such integration improves collaboration and speeds up release cycles.
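As a minimal sketch of the headline numbers such a report might contain, the code below summarizes hypothetical test-run and defect data. “Coverage” here is assumed to mean the share of planned test cases actually executed; code or requirements coverage would need different inputs. The issue keys only mimic the style of Jira keys.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class CaseResult:
    name: str
    executed: bool
    passed: bool


@dataclass
class Defect:
    key: str            # hypothetical tracker key, e.g. "APP-101"
    severity: str       # "critical", "major", or "minor"
    retest_status: str  # "pending", "passed", or "failed"


def summarize(cases: List[CaseResult], defects: List[Defect]) -> Dict[str, object]:
    """Condense raw results into the numbers a stakeholder report needs."""
    executed = [c for c in cases if c.executed]
    passed = sum(1 for c in executed if c.passed)
    open_defects = [d for d in defects if d.retest_status != "passed"]
    return {
        "execution_coverage_pct": round(100 * len(executed) / len(cases), 1) if cases else 0.0,
        "pass_rate_pct": round(100 * passed / len(executed), 1) if executed else 0.0,
        "open_defects_by_severity": dict(Counter(d.severity for d in open_defects)),
        "retests_pending": sum(1 for d in defects if d.retest_status == "pending"),
    }
```

For four planned cases with three executed and two passing, `summarize` reports 75.0% execution coverage and a 66.7% pass rate; defects whose retest has passed drop out of the open-defect counts.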

Foster a long-term partnership mindset

Onboard the external testing team with the same thoroughness as internal teams. Provide access to product documentation, user personas, and business goals. When testers understand the broader context, they can identify issues that impact user experience and business outcomes more effectively. This strategic partnership fosters a proactive approach to quality, leading to more robust and user-centric products.

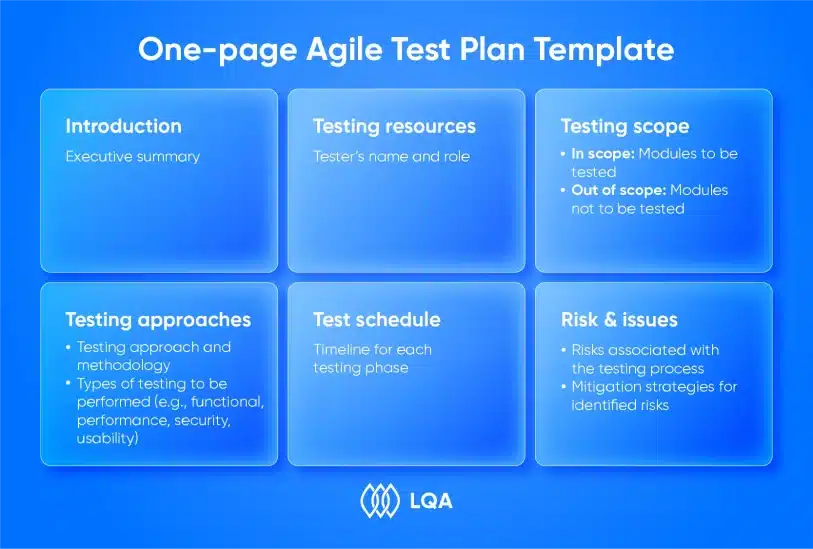

Check out the comprehensive test plan template for upcoming projects.

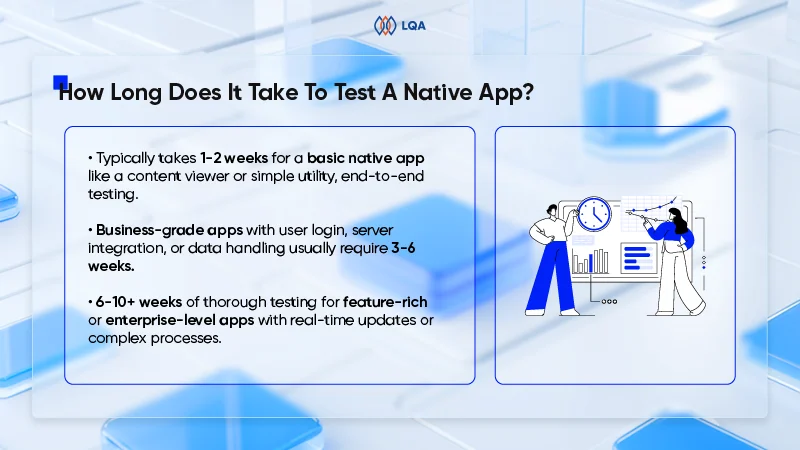

How Long Does It Take to Thoroughly Test a Native App?

Thoroughly testing a native mobile application is a multifaceted endeavor. Timelines vary significantly based on:

- App complexity (simple MVP vs. feature-rich platform)

- Platforms supported (iOS, Android, or both)

- Manual vs. automation mix

- Number of devices and testing cycles

How long does it take to test a native app?

For a basic native app, such as a content viewer or utility tool with limited interactivity, end-to-end testing might take between 1 and 2 weeks, focusing primarily on functionality, UI, and device compatibility.

However, most business-grade applications – those involving user authentication, server integration, data input/output, or performance-sensitive features – typically require from 3 to 6 weeks of testing effort.

For feature-rich or enterprise-level native apps, particularly those that involve real-time updates, background processes, or complex data transactions, testing can stretch from 6 to 10 weeks or more.

This is especially true when multi-platform coverage (iOS, Android, desktop) and a wide range of devices and OS versions are required. Native apps on mobile often need to account for fragmented hardware ecosystems, while native desktop apps may require deeper testing of system-level access, file handling, or offline modes.

Ultimately, the real question is not just “how long,” but how early and how strategically QA is integrated. Investing upfront in test strategy, automation, and risk-based prioritization often results in faster releases and lower post-launch costs, making testing not just a cost center but a business enabler.
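Risk-based prioritization is often done by scoring each area as likelihood of failure times impact of failure, then testing the highest-scoring areas first within the available effort. The sketch below assumes 1-to-5 scales and effort estimates in days; the areas and numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RiskArea:
    name: str
    likelihood: int   # 1 to 5: chance of a defect (e.g. new or frequently changed code)
    impact: int       # 1 to 5: cost of a failure to users or the business
    effort_days: int  # estimated testing effort

    @property
    def risk(self) -> int:
        return self.likelihood * self.impact


def plan(areas: List[RiskArea], budget_days: int) -> List[str]:
    """Take areas in descending risk order, skipping any that no longer fit the budget."""
    selected: List[str] = []
    used = 0
    for area in sorted(areas, key=lambda a: a.risk, reverse=True):
        if used + area.effort_days <= budget_days:
            selected.append(area.name)
            used += area.effort_days
    return selected


AREAS = [
    RiskArea("payments", likelihood=4, impact=5, effort_days=5),
    RiskArea("login", likelihood=3, impact=5, effort_days=2),
    RiskArea("offline sync", likelihood=4, impact=3, effort_days=4),
    RiskArea("settings screen", likelihood=2, impact=2, effort_days=2),
    RiskArea("onboarding animations", likelihood=2, impact=1, effort_days=2),
]
```

With an 11-day budget, `plan(AREAS, 11)` returns `['payments', 'login', 'offline sync']`; the low-risk areas would be left for lighter smoke checks or a later cycle.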

FAQs About Native App Testing

- What is native app testing, and how is it different from web or hybrid testing?

Native app testing focuses on apps built specifically for a platform (iOS, Android, Windows) using platform-native code. These apps interact more directly with device hardware and OS features, so testing must cover areas like performance, battery usage, offline behavior, and hardware integration. In contrast, web and hybrid apps run through browsers or webviews and don’t require the same depth of device-level testing.

- How do I know if outsourcing native app testing is right for my business?

Outsourcing is a good choice when internal QA resources are limited or when there’s a need for broader device coverage, faster turnaround, or specialized skills like security or localization testing. It helps reduce time-to-market while controlling costs, especially during scaling or high-volume release cycles.

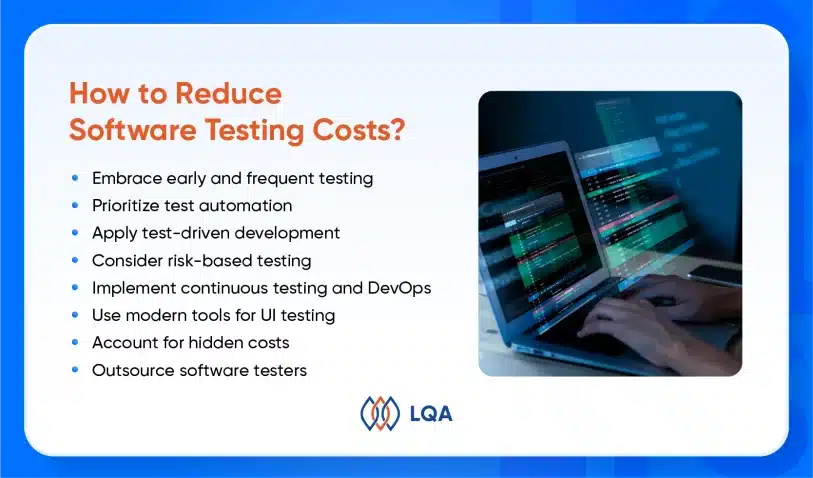

- How much does it cost to outsource native app testing?

While specific figures for outsourcing native app testing are not universally standardized, industry insights suggest that software testing expenses typically account for 15% to 25% of the total project budget. For instance, if the total budget for developing a native app is estimated at $100,000, the testing phase could reasonably account for $15,000 to $25,000 of that budget. This range encompasses various testing activities, including functional, performance, security, and compatibility testing.

Final Thoughts on Native App Testing

By understanding what native app testing entails, weighing the pros and cons of different approaches, and applying best practices when working with external testing teams, businesses can make smart decisions. More importantly, companies will be better equipped to decide if outsourcing is the right path and how to do it in a way that maximizes efficiency.

Ready to get started?

LQA’s professionals are standing by to help make application testing a snap, with the know-how businesses can rely on to go from ideation to app store.

With a team of experts and proven software testing services, we help you accelerate delivery, ensure quality, and get more value from your testing efforts.

Contact us today to get the ball rolling!