Data Annotation: Best Practices for Project Management

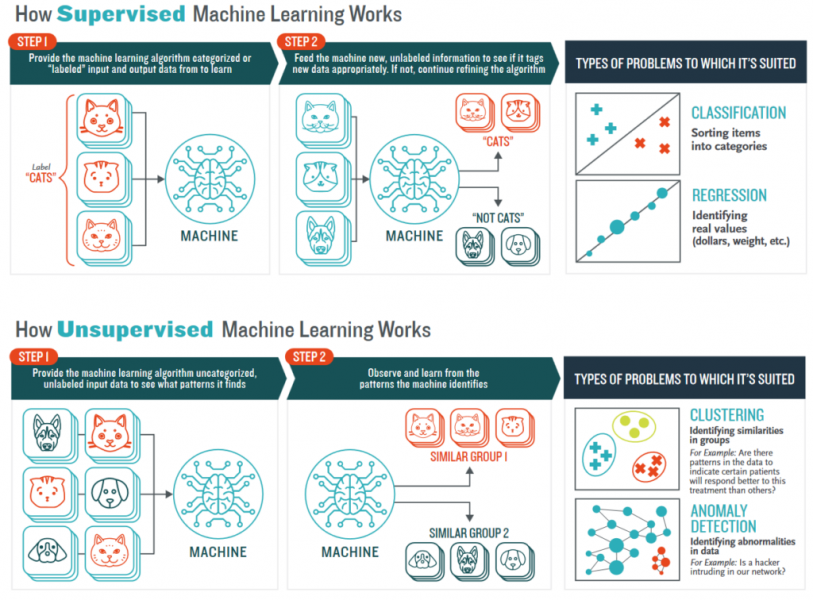

How can we obtain the highest quality in our Artificial Intelligence/Machine Learning? The answer is high-quality training data, according to many scientists. But to ensure such high-quality work might not be that easy. So the question is “What is the data annotation best practices?”

One might think of data annotation as mundane and tedious work that requires no strategic thinking. Annotators only have to work on their data and then submit them!

However, the reality speaks differently. The process of data annotation might be lengthy and repetitive, but it has never been easy, especially for managing annotation projects. In fact, many AI projects have failed and been shut down, due to the poor quality of training data and inefficient management.

In this article, we will guide you through the Data annotation best practices to ensure data labelling quality. This guide follows the steps in a data annotation project and how to successfully and effectively manage the project:

- Define and plan the annotation project

- Managing timelines

- Creating guidelines and training workforce

- Feedback and changes

1. Define and plan the annotation project

Every technological project needs to start with the defining and planning step, even for a seemingly easy task like data annotation.

First off, there needs to be the clear clarification and identification of the key elements in the project, including:

- The key stakeholders

- The overall goals

- The methods of communication and reporting

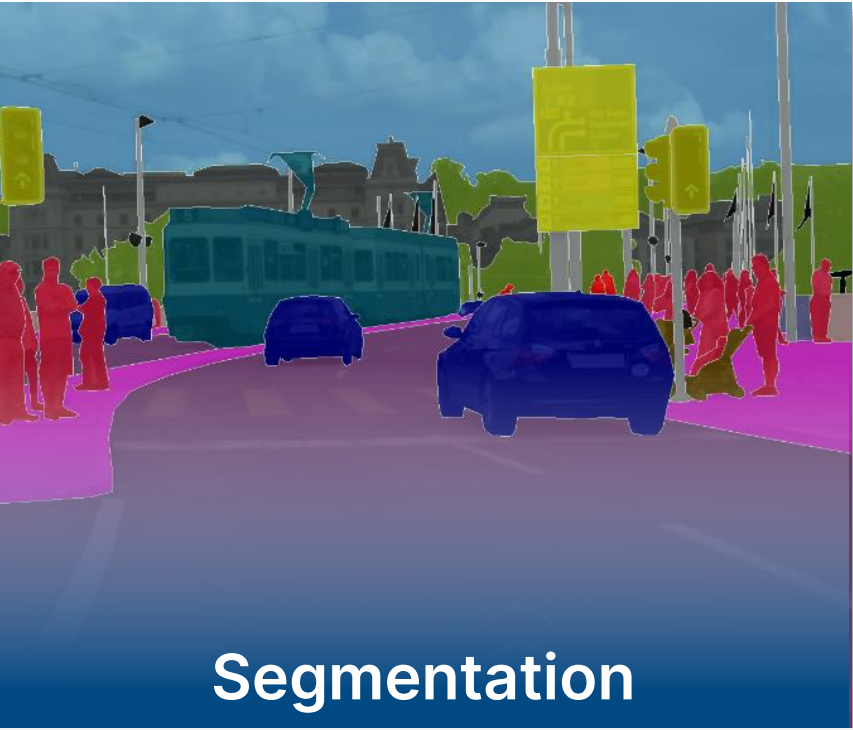

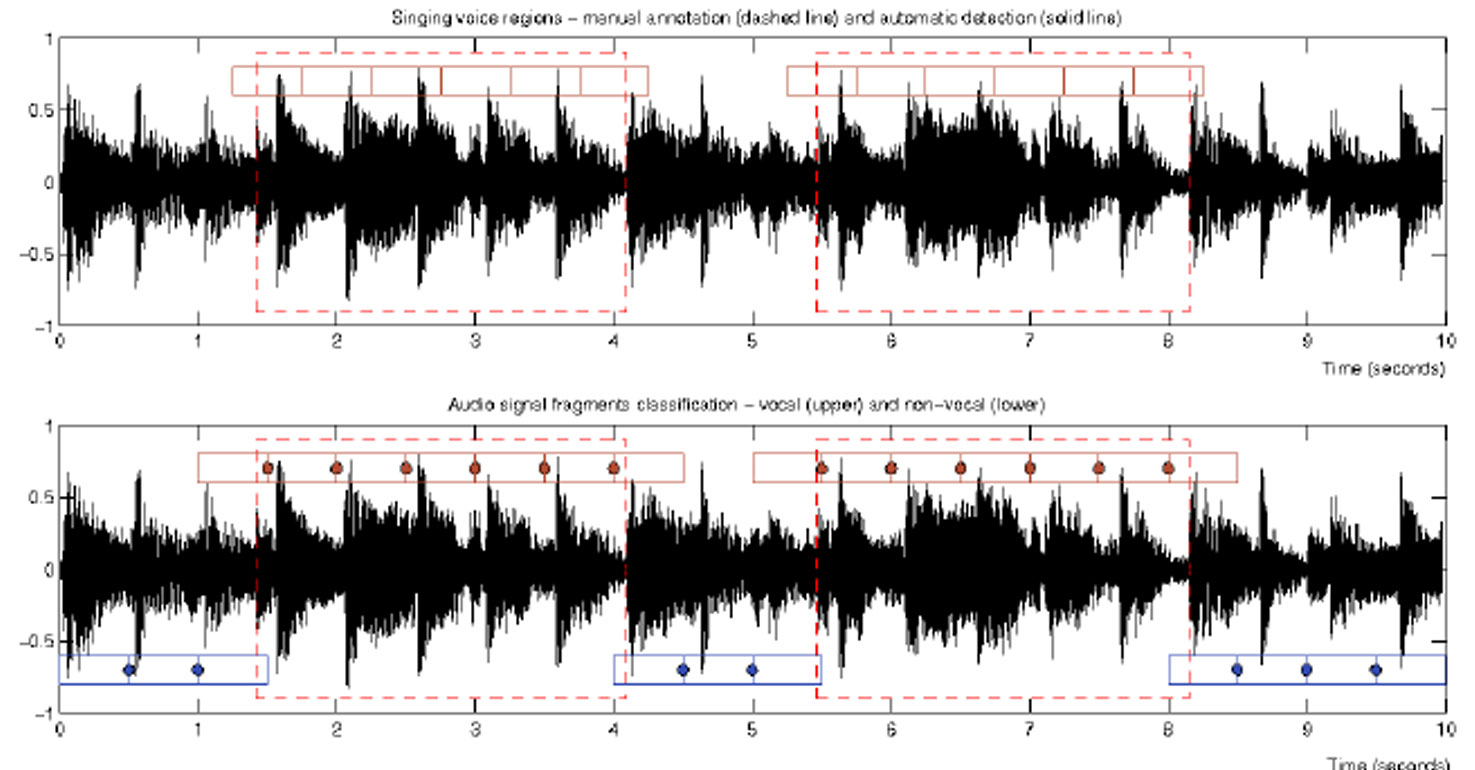

- The characteristics of the data to be annotated

- How the data should be annotated

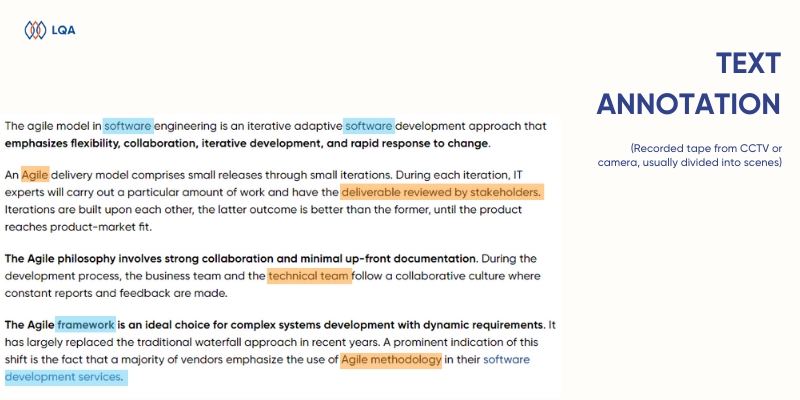

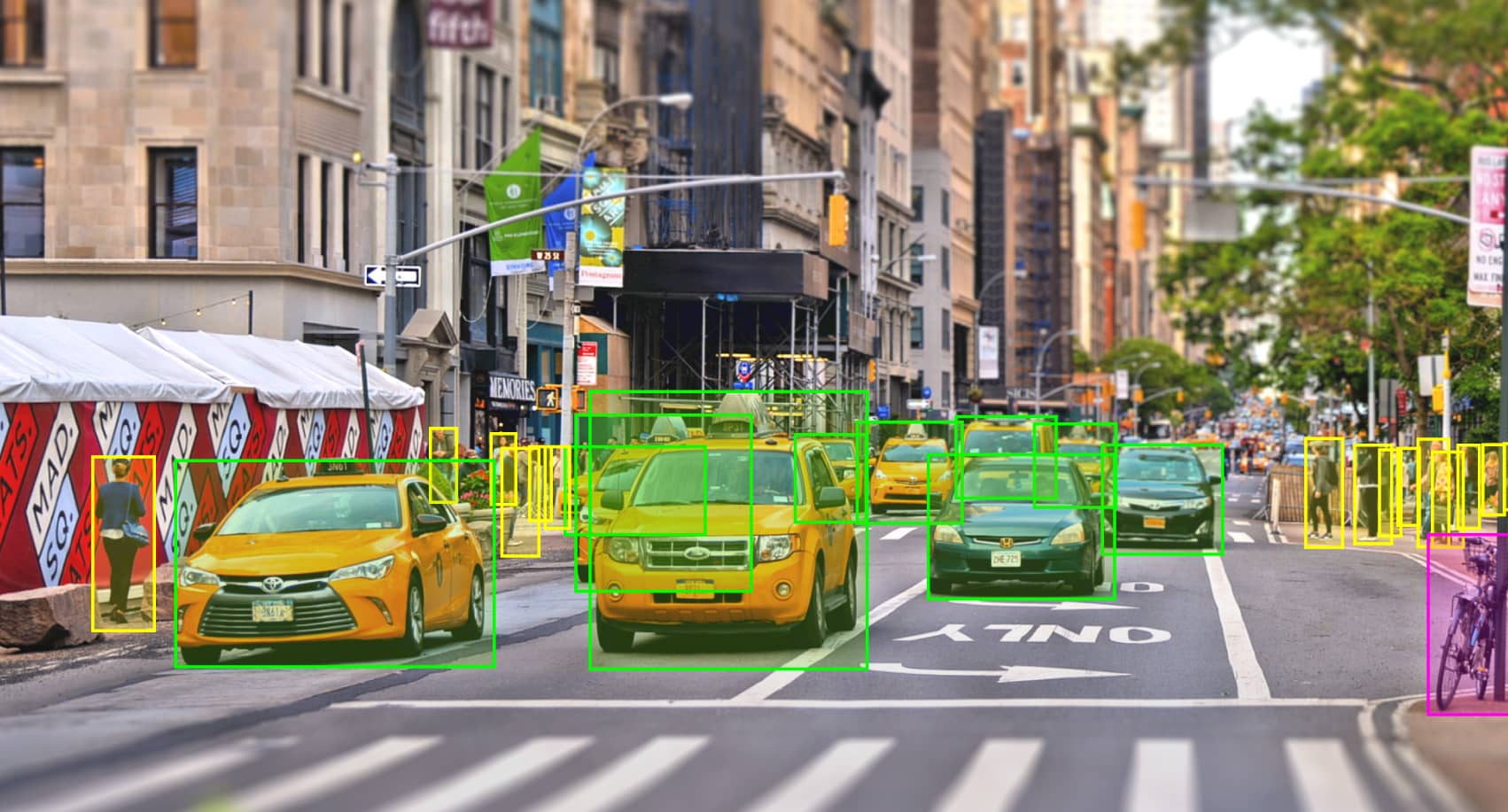

Data annotation best practices – Training datasets

The key stakeholders

With the key stakeholders, there are mainly three of them:

- The project manager of the whole AI product: It is a must for project managers to determine are the ones to set out the practical application of the project, and how what kinds of data need to be put into the AI/ML model.

- The annotation project manager: His/her main duties include the day-to-day functions, and they will be responsible for the quality of the outputs. They will work directly with the annotators and conduct necessary training. When you have an annotation project manager, make sure that they have subject matter expertise so that they can start working on the project right away.

- The annotators: For the annotators, it is best that they are well-trained of the labeling tools (or the auto data labeling tool).

After identifying the stakeholders, you can easily set out their responsibilities. For example, the overall quality of the datasets will be the responsibility of the annotation project manager, but how the data is used in the AI/ML model will be solely on the project manager.

Each of these stakeholders has their own job, their own skill sets and their valuable perspective to achieve the best result. If your project lacks any of these stakeholders, it can be at risk of poor performance.

The overall goals

For any data annotation project, you need to know what you want as an output, hence developing the appropriate measures to achieve it. With the key project stakeholders, the project manager can put all of their input together and come up with the overall goals.

Data annotation best practices – Overall goals

To come up with the overall goals, you need to answers to these:

- The desired functionality

- The intended use cases

- The targeted customers

Once the overall goals are clarified, the next step of the annotation project will be more projected and well-defined, making the working process easier.

The methods of communication and reporting

It is quite all over the place when it comes to communication and reporting in data annotation projects. Communication in software development seems to be much more emphasized than in data annotation, but it doesn’t mean that the communication is of less significance.

Maybe the communication among the annotators is thin but between the annotators and project manager or the annotation manager, it is not the case. In fact, they need to constantly keep track of each other’s work to ensure the overall quality.

Therefore, the use of communication platforms and reporting app is very important.

- For communication, the project manager can choose from Scrum, Kanban or the Dynamic Systems Development Method.

- For reporting, the annotation manager needs to establish a system of controlling the quality and quantity of the annotators. The simplest, yet very effective way is through Excel or Google Spreadsheet.

The characteristics of the data to be annotated

The stakeholders need to understand the following:

- The features

- The limitations

- The patterns

With the initial understanding of the data, the next vital step is to sample for data annotation and whether any pre-processing of the dataset is needed

With any project that has a big sum of data, the annotation manager needs to break down the project into small parts for trial. With microprojects like this, the annotators don’t necessarily need the subject matter expertise to carry out.

Check out: Data Annotation Guide

2. Managing timeline

The timeline is another important feature that needs to be well taken care of. Every stakeholder will have to be involved in this process to define the expectations, constraints and dependencies along the timeline. These features can have a great impact on the budget and the time spent on the project.

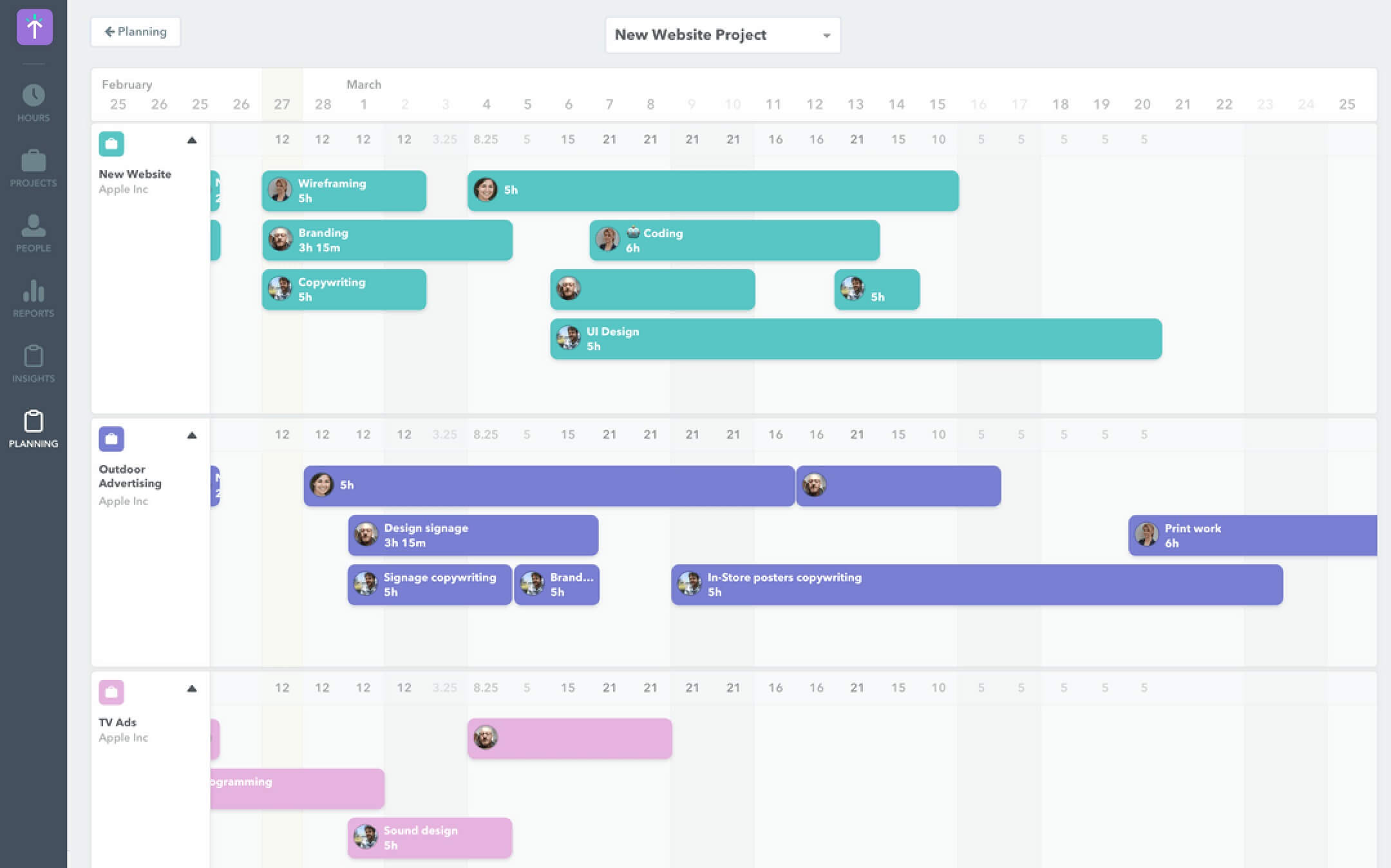

Data annotation best practices – Managing timeline

There are some ground rules for the team to come up with a suitable timeline:

- All stakeholders have to be involved in the process of creating a timeline

- The timelines should be clearly stated (the date, the hour, etc.)

- The timelines must also include the time for training and creating guidelines.

- If there are any issues or uncertainties related to the data and the annotation process should be communicated to all stakeholders and documented as risks, where applicable.

- In this process, the timeline will be decided as follows:

- For the product managers, they must take into account the overall requirements of the project. What are the deadlines? What are the requirements and the user experience? Since the product managers don’t directly get involved in the data annotation process, they need to know or be educated about the complexity of the project, hence setting reasonable expectations.

- For the annotation managers, they need to know the project’s complexity to allocate the annotators need to know to do the project. What is the subject matter knowledge required with this project? How many people are required to do this? How do they ensure the high-quality and follow the timeline effectively? These are the questions that they need to answer.

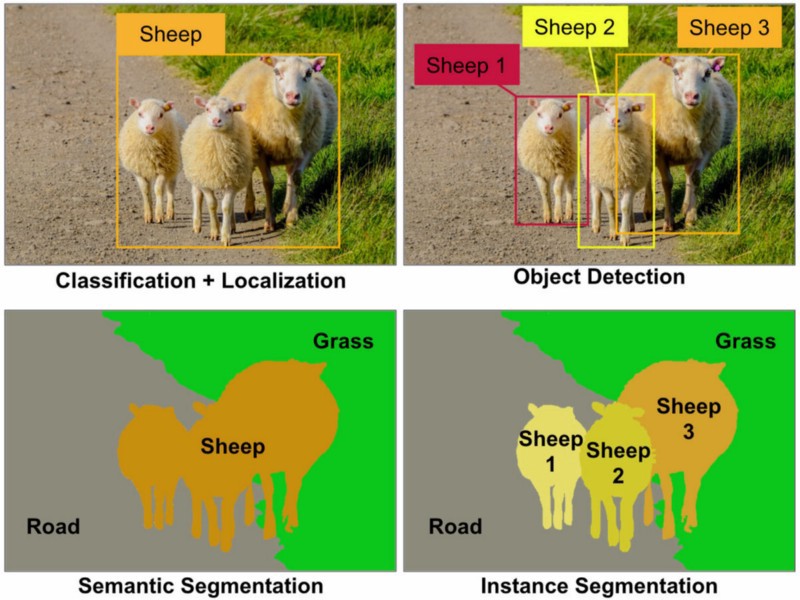

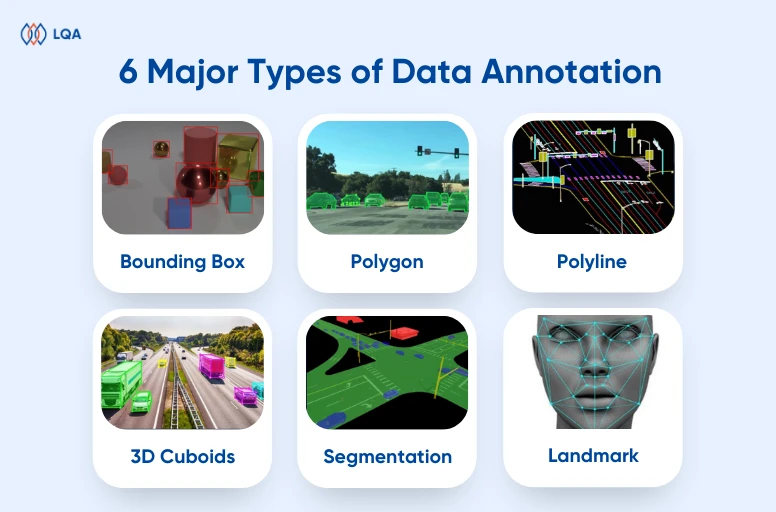

- For the data annotators, they need to clarify what type of data they’re working on, what types of annotation and the knowledge required to do the job. If they don’t have them, it is a must that they are trained with an expert.

Check out: Data Annotation Working Process

3. Creating guideline and training workforce

Before stepping into the annotation process, you must consider the guideline and the training so that the team can achieve the highest quality in their work.

Creating guideline

For the data annotated to be consistent, the team needs to come up with a full guideline for one particular data annotation project.

This guideline should be built based on all of the information there is about the project. If you have similar projects like this, you should also write the new guideline based on it.

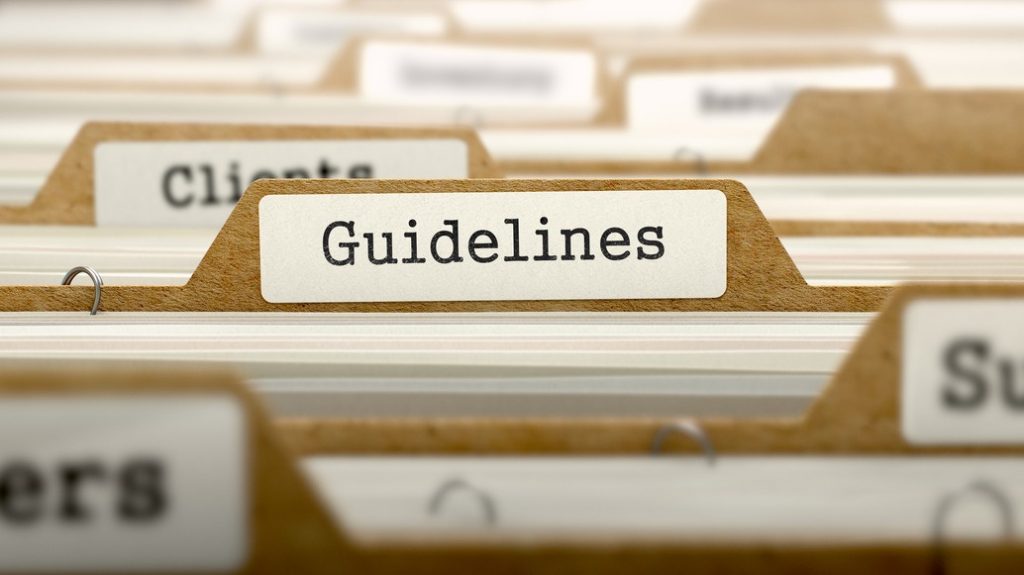

Data annotation best practices – Creating guidelines

Here are some ground rules for creating a guideline in data annotation:

- The annotation project manager needs to put the complexity and the length of the project in mind. Especially with the complexity of the project will affect the complexity of the guideline.

- Both tool and annotation instructions are to be included in the guideline. Introduction to the tool and how to do it must be clearly stated.

- There must be examples to illustrate each label that the annotators have to work with. This helps the annotators understand the data scenarios and the expected outputs more easily.

- Annotation project managers should consider including the end goal or downstream objective in the annotation guidelines to provide context and motivation to the workforce.

- The annotation project manager needs to make sure that the guideline is consistent with other documentation of the project so that there will be no conflict and confusion.

Training workforce

Based on the guideline that stakeholders have, the annotation team manager now can continue with the training easily.

Again, don’t think of the annotation as easy work. It can be repetitive but also requires much training and subject matter knowledge. Also, training for the data annotators requires attention to many matters, including:

- The nature of the project: Is the project complicated? Does the data require subject matter knowledge?

- The project’s time frame: The length of the project will define the overall time spent on training

- The resources of the individual or group managing the workforce.

After the training process, the annotators are expected to adequately understand the project and produce annotations that are both valid (accurate) and reliable (consistent).

Data annotation best practices – Training workforce

During the training process, the annotation manager needs to make sure that:

- The training is based on one guideline to ensure consistency.

- If there is a case of new annotators joining the team when the project has already started, the training process will be done again, either through direct training or training in recorded video.

- If there is any question, all of them have to be answered before the project has started.

- If there is confusion or misunderstanding, it should be addressed right at the beginning of the project to avoid any errors later.

- The matter of quality output must be clearly defined in the training process. If there is any quality assurance method, it should be announced to the annotators.

- Written feedback is given out to the data annotators so they know what metrics they are going to work on.

During the annotation process, the quality of the training datasets relies on how the annotation manager drives the annotation team. To ensure the best result, you can take the following measures:

- After the requirements of the project are clarified, you need to set reasonable targets and timelines for the annotators to achieve.

- Every estimation and pilot phase needs to be done beforehand.

- You need to define the quality assurance process and which staff to be involved (possibly QA staff)

- The annotation manager needs to address the collaboration between the annotators. Who will help who? Who will cross-check whose work?

- You divide the project into smaller phases, then give feedbacks to erroneous work.

- The Annotation manager will be the one who ensures technical support for the annotation tool throughout the annotation process to prevent project delay. If there is to be any problem that can’t be solved singlehandedly, he/she needs to ask the tool provider or the project manager for viable solutions.

4. Feedback and changes

After the annotation is complete, it is important to assess the overall outcome and how the team did the work. By doing this, you can confirm the validity and reliability of the annotations before submitting them to another team or clients.

If there were any additional annotations, you need to take another look at the strategic adjustments to the project’s definition, training process, and workforce, so the next round of annotation collection can be more efficient.

It is also very important to implement processes to detect data drift and anomalies that may require additional annotations.

How Lotus QA manager our annotation projects

To ensure the high quality on your training datasets is not easy. Actually, it is quite a troublesome process to allocate the work, do the training and give feedback. Maintaining such a large team of the project manager, annotation manager and annotators can take up many resources and effort.

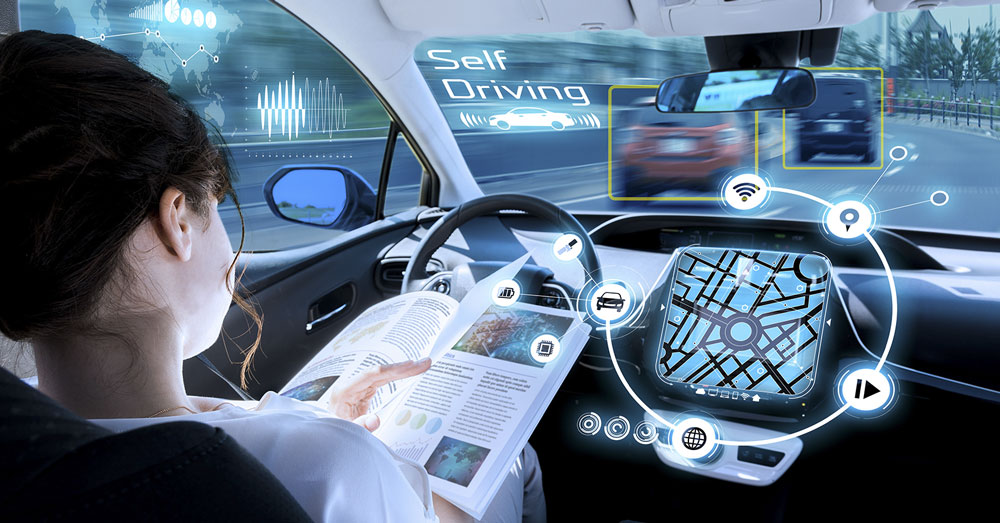

LQA is one of the top 10 Data labelling companies in Vietnam with a team of 6-year experience, working in multiple annotation projects and many data types. We also have a strong team of data annotation project managers and QA staff to ensure the quality of our outputs. From agriculture to fashion, from sports to automobile projects, we’ve done it all. Working with LQA, you can rest assured that your data is in the right hand. Don’t hesitate to contact us if you want to know more about managing data annotation projects.

- Website: https://www.lotus-qa.com/

- Tel: (+84) 24-6660-7474

- Fanpage: https://www.facebook.com/LotusQualityAssurance