Unveil Top 5 Automation Testing Challenges And Optimal Solutions

Automation testing is a technique that uses automated testing tools to run tests across multiple platforms. It is considered an efficient software testing method, offering high accuracy with little manual effort. Still, it comes with problems, some obvious and some hidden.

Top 5 automation testing challenges that enterprises have to face:

- High initial investment cost

- High demand for necessary skills

- Complicated maintenance

- Complicated execution

- Difficulties in lab management

This article will dig into these 5 common challenges facing automation testing and solutions to minimize their effects on enterprises.

Top 5 Automation Testing Challenges

1. High initial investment cost

First, let’s take a closer look at the initial investment cost of automation testing. To estimate the Return on Investment (ROI), the first thing to consider is the likely initial investment in an automation testing system, including:

- Cost for human resources

- Cost for automation tools

Cost for human resources

The automation testing process relies on both automated testing tools and automated testing engineers, who are also called Software Development Engineers in Test (SDETs).

Compared with non-technical testers, engineers with coding skills are far more expensive to hire.

Also, demand for software testers, and for automation testers in particular, is surging, which makes recruitment more competitive and raises talent acquisition budgets.

Talent acquisition poses a challenge in Automation Testing

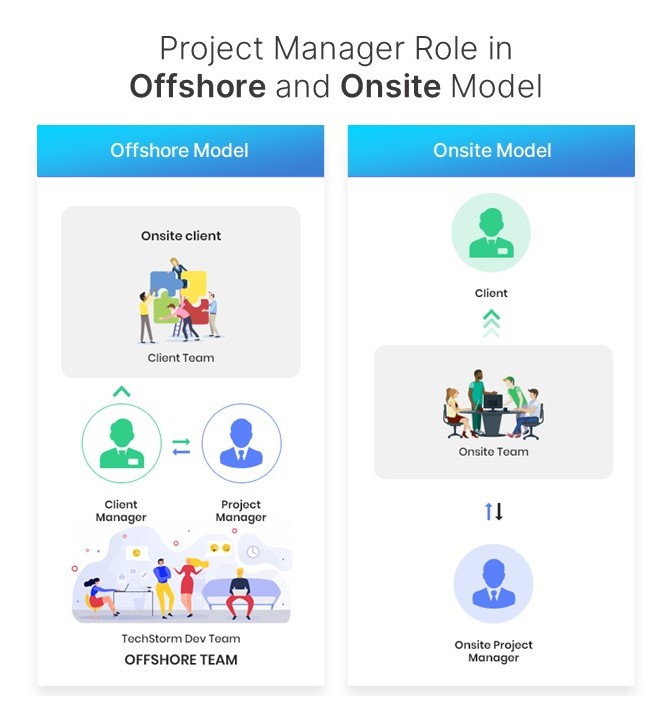

The human resources dilemma comes down to two groups: testing engineers who are fluent in several programming languages, and domain experts who know the industry but have little or no coding experience. Whether the testers are onshore or offshore, those with coding skills cost much more than non-technical testers.

To put it differently, enterprises have to trade off non-technical testers who know the industry against automated testing engineers who can code.

Solutions: The problem of high cost for automation test engineers could be handled in two ways:

- Training current employees: This is a budget-friendly way to overcome challenges in automation testing. Still, it often takes many months for a newly trained automated testing engineer to become fully productive.

- Outsourcing automated testing engineers: To avoid spending months on training and coaching, many firms have chosen the solution of outsourcing automated testing engineers.

Cost for automation tools

There are two main types of automation testing tools: open-source and commercial. Open-source frameworks such as Selenium are free to use (some proprietary tools, such as Katalon, also offer a free tier), while commercial tools charge based on licenses or the number of users.

Still, there are “hidden costs” whether you use an open-source testing tool or a licensed one. For commercial frameworks, the obvious payments are license and development costs. Free automation testing tools, on the other hand, may not be enough for your business needs.

Solution: To reduce the cost of automation tools, you should first clarify your requirements and check if free tools can handle your needs. If not, go to a commercial solution that can benefit you the most in the long run.
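The two cost components above can be plugged into a rough ROI estimate. The sketch below is only an illustration: the class name, the function name, and every figure are assumptions made up for the example, and the "savings" input stands for whatever manual testing cost you expect automation to replace.

```python
from dataclasses import dataclass


@dataclass
class AutomationCosts:
    """Hypothetical initial investment; the figures used below are illustrative."""
    engineers: float  # human resources: salaries, training, or outsourcing fees
    tools: float      # licenses, or setup effort for free tools

    def total(self) -> float:
        return self.engineers + self.tools


def automation_roi(costs: AutomationCosts, manual_cost_saved: float) -> float:
    """Return ROI as a fraction: (savings - investment) / investment."""
    investment = costs.total()
    if investment <= 0:
        raise ValueError("investment must be positive")
    return (manual_cost_saved - investment) / investment


if __name__ == "__main__":
    costs = AutomationCosts(engineers=60_000, tools=20_000)
    roi = automation_roi(costs, manual_cost_saved=120_000)
    print(f"Estimated ROI: {roi:.0%}")  # prints "Estimated ROI: 50%"
```

Running the same calculation for an in-house team, an outsourced team, a free tool, and a commercial tool makes the trade-offs in this section easier to compare.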

2. Demand for high skills

A common myth about automation testing is that it is “simple”, “easy” or “quick”. In fact, the work, which includes test design, test script writing, test maintenance and technical issue resolution, demands deep automation knowledge and a solid grasp of automation tools, which is why salaries for automation testing engineers are so high.

Typically, automation testing engineers are expected to know automation frameworks, have strong programming skills, and understand the available automation tools well. The ability to identify appropriate frameworks, apply the right tools, and coordinate the testing process is vital for any automation testing engineer.

Solutions: Companies can weigh the pros and cons of in-house versus outsourced teams for automation testing. The skills above can be acquired through either in-house training or automation testing vendors.

3. Complicated maintenance

As automation testing is a hot topic in quality assurance services, its maintenance is critical to the overall efficiency of the testing process. Once a test case or script is written, it needs maintenance every time the software application or its features change.

Test Maintenance is a major challenge in Automation Testing

The scope of test maintenance varies with the complexity of the changes themselves. Whether an update to the application is functional or non-functional, the relevant test cases have to be executed before release. Compared with manual testing, automation testing is harder to maintain because updating scripts requires strong programming skills.

Solutions:

- Modular test framework

With a modular framework for automated tests, the test suite is divided into smaller pieces, each covering a different function. When a function is updated, only its piece has to be retested, which makes it easier for automation testing engineers to locate the code that needs updating.
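As a minimal sketch of this idea, the example below keeps everything a test needs to know about one screen inside one module. All names here (`FakeDriver`, `LoginModule`, `CartModule`, the element identifiers) are hypothetical; `FakeDriver` is a stand-in that only records actions, so the sketch runs without a real browser or any particular automation tool.

```python
class FakeDriver:
    """Stand-in for a real browser driver; it only records what was done."""

    def __init__(self):
        self.actions = []

    def type(self, field, text):
        self.actions.append(("type", field, text))

    def click(self, button):
        self.actions.append(("click", button))


class LoginModule:
    """Everything the tests know about the login screen lives here."""

    USER_FIELD = "username"
    PASS_FIELD = "password"
    SUBMIT = "sign-in"

    def __init__(self, driver):
        self.driver = driver

    def log_in(self, user, password):
        self.driver.type(self.USER_FIELD, user)
        self.driver.type(self.PASS_FIELD, password)
        self.driver.click(self.SUBMIT)


class CartModule:
    """Everything the tests know about the shopping cart lives here."""

    ADD_BUTTON = "add-to-cart"

    def __init__(self, driver):
        self.driver = driver

    def add_item(self, item_id):
        self.driver.click(f"{self.ADD_BUTTON}-{item_id}")


def run_checkout_flow(driver):
    """A test flow composed from modules rather than raw element lookups."""
    LoginModule(driver).log_in("demo", "secret")
    CartModule(driver).add_item(42)
    return driver.actions


if __name__ == "__main__":
    for action in run_checkout_flow(FakeDriver()):
        print(action)
```

If the sign-in button is renamed, only `LoginModule.SUBMIT` changes; every flow that logs in keeps working unmodified.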

- A separate test for each verification point

Test developers can pack numerous verification points into a single test. However, such test scripts become complex and difficult for anyone other than the original coder to edit. With a separate test for each verification point, it is easier for the team to update.
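Here is a small sketch of what one test per verification point can look like, using Python's built-in `unittest`. The function under test, `apply_discount`, is a hypothetical example invented for illustration.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTests(unittest.TestCase):
    # Each method checks exactly one verification point, so a failure
    # report names the broken behavior directly.

    def test_reduces_price(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_percent_keeps_price(self):
        self.assertEqual(apply_discount(80.0, 0), 80.0)

    def test_rejects_percent_above_100(self):
        with self.assertRaises(ValueError):
            apply_discount(80.0, 101)


if __name__ == "__main__":
    unittest.main()
```

When the discount rules change, the team edits or replaces only the test whose name matches the changed rule, instead of untangling one long script.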

- Continuous Integration and Continuous Delivery

Continuous Integration and Continuous Delivery (CI/CD) are practices in which even minor changes are built and tested as soon as they are made. With these in place, the development and testing process is faster and more efficient.

Implementing CI/CD also brings robust reporting on test scripts and test results. If bugs leak into other environments, the CI/CD pipeline can help you identify which part needs updating.
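To illustrate how pipeline reports can point to the part that needs updating, the sketch below groups failed tests by module. It assumes the pipeline emits a JUnit-style XML report, a format many test runners can produce; the sample report and the function name `failures_by_module` are made up for the example.

```python
import xml.etree.ElementTree as ET
from collections import defaultdict

# Made-up sample of a JUnit-style report, as a CI job might archive it.
SAMPLE_REPORT = """\
<testsuite name="regression" tests="3" failures="1" errors="0">
  <testcase classname="tests.login" name="test_valid_user"/>
  <testcase classname="tests.cart" name="test_add_item">
    <failure message="button not found"/>
  </testcase>
  <testcase classname="tests.cart" name="test_remove_item"/>
</testsuite>
"""


def failures_by_module(report_xml: str) -> dict[str, list[str]]:
    """Map each test module to the names of its failed or errored tests."""
    root = ET.fromstring(report_xml)
    failed = defaultdict(list)
    for case in root.iter("testcase"):
        if case.find("failure") is not None or case.find("error") is not None:
            failed[case.get("classname", "unknown")].append(case.get("name", ""))
    return dict(failed)


if __name__ == "__main__":
    print(failures_by_module(SAMPLE_REPORT))  # {'tests.cart': ['test_add_item']}
```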

4. Complicated execution

During execution, automation scripts are run with input test data. Once execution is finished, detailed test reports will be available. From these reports, appropriate and viable changes and updates can be made.

Automation testing execution involves difficulties in:

- Test approach selection

- Automation testing tool selection

- Communication and Collaboration

High Demand in Test Approach Selection

An appropriate automation test approach plays a key role in the effective result of a project.

At the management level, you certainly know what a test approach is and how to create one; applying it to test automation, however, is another matter.

- The first difficulty is making the automation process last as long as the product itself. For example, the life cycle of a desktop application commonly ranges from 12-18 months to over 15 years. Therefore, the test approach needs to cover the software’s whole life span.

- Secondly, the test approach has to make sure that when the product changes or is updated, the automation can identify and keep up with these changes without human intervention. Taking a mobile application as an example, the approach can’t be “one size fits all” because user requirements change rapidly.

These difficulties are hard to address, because they require building an effective, long-term-oriented framework right from the beginning.

Solution: Define the following elements of the test approach:

- Testing process

- Testing levels

- Testing types

- Automation tools applicable

- HR allocation with different roles and responsibilities

Diverse choices of automation testing tools

One of the automation testing challenges is selecting the right testing tool from the wide variety on the market. There are open-source and commercial tools, and there are various types within each category. Each tool suits particular scenarios; Selenium, for example, is an open-source tool that demands more programming skills from testers.

Tools for Automation Testing

In particular, the right tool has to match many factors, such as the long-term orientation of the project, the framework, the project's output, the clients' requirements, and the skills of the testing team. If you pick the wrong tool, the whole process can fail from the start. Indeed, open-source tools often require a higher level of coding skill than commercial tools.

Solution:

Our expert testers recommend the following steps to choose tools:

- Defining a set of tool requirements criteria

- Reviewing the chosen tools

- Conducting a trial test with the tools

- Making the final decision on whether to use these tools
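One way to make the first and last of these steps concrete is a weighted scoring matrix. The sketch below is an illustration, not a prescribed method: the criteria, weights, tool names, and ratings are all invented, and in practice the ratings would come from the review and trial steps.

```python
def score_tools(weights, ratings):
    """Return (tool, weighted average score) pairs, best first.

    weights: criterion -> importance
    ratings: tool -> {criterion -> rating on a 1-5 scale}
    """
    total_weight = sum(weights.values())
    scores = {
        tool: sum(weights[c] * tool_ratings[c] for c in weights) / total_weight
        for tool, tool_ratings in ratings.items()
    }
    return sorted(scores.items(), key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical criteria and ratings, for illustration only.
    weights = {"team_skill_fit": 3, "license_cost": 2, "long_term_support": 1}
    ratings = {
        "Tool A": {"team_skill_fit": 2, "license_cost": 5, "long_term_support": 3},
        "Tool B": {"team_skill_fit": 4, "license_cost": 2, "long_term_support": 4},
    }
    for tool, score in score_tools(weights, ratings):
        print(f"{tool}: {score:.2f}")
```

With these made-up numbers, Tool B ranks first even though Tool A is cheaper, because team skill fit carries the highest weight. Changing the weights to match your project changes the ranking.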

Barriers in communication and collaboration

Compared with manual testing and development, automated testing actually requires more collaboration. If an early misunderstanding is overlooked or ignored, the process can become messy.

From the beginning, good interaction between the delivery team and the customer is a must, so that both sides fully analyze and understand the input and output of the project.

When it comes to the test strategy, the testing team needs to work with project managers on the plan, scope, and framework.

Automation testers talk not only with developers to understand the code, but also with manual testers about test cases and with infrastructure engineers about integration, in order to build up the final product.

Solution: Establishing a collaborative environment, with a specific point of contact for each process and clear expectations and responsibilities for each member, helps everyone share information quickly and conveniently. Plus, active involvement and a transparent framework will help shape your company culture.

5. Difficulties in lab management

A device lab that can match the scope of automation testing has to be a big one. Some teams prefer building and maintaining their own device labs, which can be quite expensive.

For every operating system, there are different browser versions and different devices. To get full value from a device lab, it has to be kept up to date and well maintained, hence the high cost.
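A quick sketch shows how fast the number of combinations grows. The platform lists below are illustrative assumptions, not a recommended coverage set, and `build_test_matrix` is a hypothetical helper.

```python
from itertools import product

# Illustrative values only; substitute the platforms your users actually have.
OPERATING_SYSTEMS = ["Windows 11", "macOS 14", "Ubuntu 22.04"]
BROWSERS = ["Chrome", "Firefox"]
BROWSER_VERSIONS = ["latest", "previous"]


def build_test_matrix(systems, browsers, versions):
    """Return one configuration per OS / browser / version combination."""
    return [
        {"os": os_name, "browser": browser, "version": version}
        for os_name, browser, version in product(systems, browsers, versions)
    ]


if __name__ == "__main__":
    matrix = build_test_matrix(OPERATING_SYSTEMS, BROWSERS, BROWSER_VERSIONS)
    print(len(matrix))  # prints 12
```

Three operating systems, two browsers, and two versions already give 12 configurations before any physical devices are added, and each extra dimension multiplies the total.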

Besides the high cost of having your own lab, lab management also poses a great challenge. In today’s competitive world, teams need to be able to run a test at any time.

Your solution needs to provide open access to the lab and equip teams with the right tools to run tests. This ultimately helps you stay adaptable and keep pace with new releases.

Solution: Cloud-Based Test Lab

A cloud-based lab is key for continuous testing, unless there are special testing requirements or scenarios involving IoT, special networking (especially in the telecom space), and the like.

To sum up, automation testing pays off and is a great way for companies to speed up progress; however, test automation cannot completely replace human intelligence. We still need humans to steer the whole automation testing process in order to avoid or reduce these challenges.

Want to find the solutions for the automation testing challenges? Contact LQA now for FREE consultation with our specialists and experts.

- Website: https://www.lotus-qa.com/

- Tel: (+84) 24-6660-7474

- Fanpage: https://www.facebook.com/LotusQualityAssurance

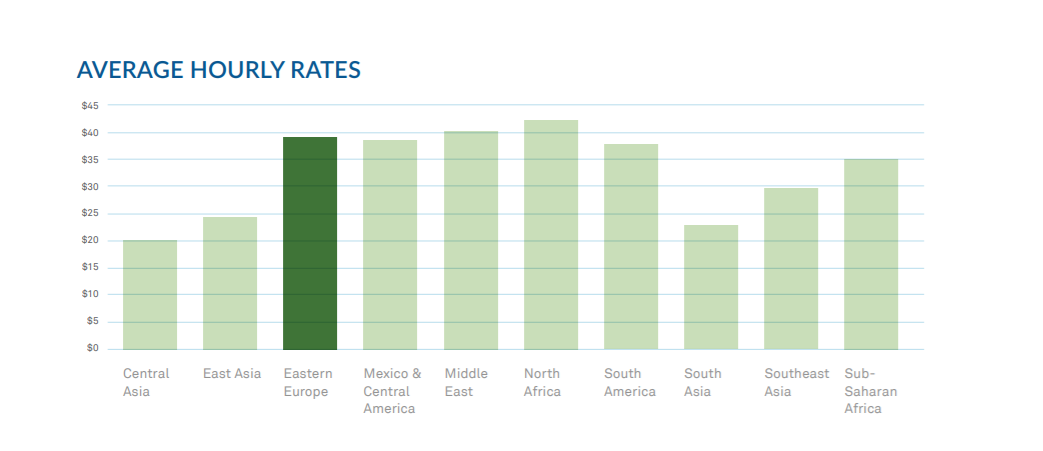

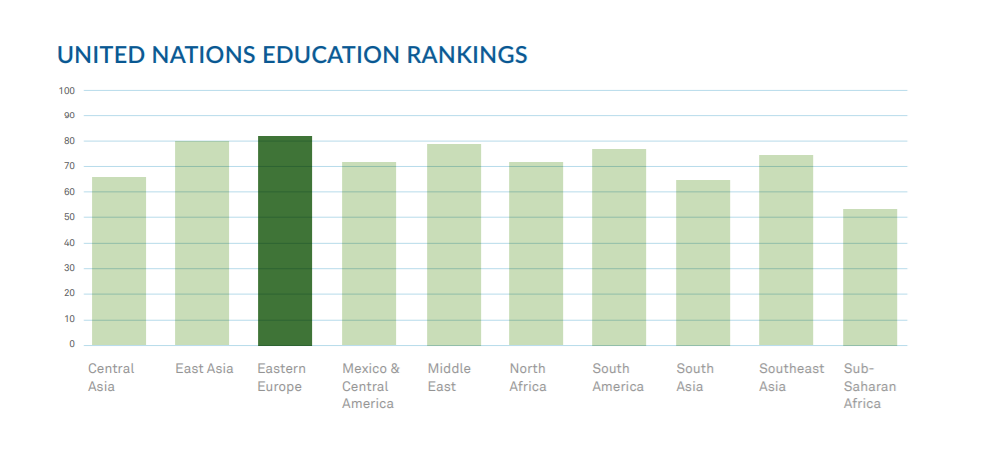
