Automation Testing vs. Manual Testing: Which is the more cost-effective solution for your firm?

The ever-accelerating pace of software development creates a pressing need for faster and more reliable testing. So, automation testing vs. manual testing: which one should you go with?

Even as automation spreads, manual testing remains a vital part of the testing process, and some of its characteristics make it impossible to exclude entirely.

Both automation testing and manual testing can offer cost-efficiency and security for your firm. This article answers three underlying questions to help you decide which approach will produce the best outcome:

- What are the parameters for the comparison between the two?

- What are the pros and cons of automation testing and manual testing?

- Which approach suits which kind of testing and which kind of firm?

What is automation testing?

Automation testing is a testing technique that uses tools and test scripts to automate testing work. In other words, specialized and customized tools carry out parts of the testing process instead of relying solely on manual effort.

Automated testing is widely considered a more innovative technique for boosting effectiveness, test coverage, and test execution speed in software testing. With this approach, the testing process is expected to run more test cases in less time and to expand test coverage.

While it does not entirely exclude the manual touch, automation testing is a favorable solution because of its cost-efficiency and limited human intervention. In other words, manual effort is still needed up front to write and maintain the scripts that make automation possible.
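
To make the idea of a test script concrete, here is a minimal sketch using Python's built-in `unittest` module. The function `apply_discount` is a hypothetical piece of application code invented for this illustration; a real project would import its own code instead.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test: reduce a price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    """Each method is one test case that the tool runs without human input."""

    def test_typical_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount_keeps_price(self):
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_invalid_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, 120)


def run_suite() -> unittest.TestResult:
    """Load and run every test case in ApplyDiscountTest, returning the result."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

A person still has to write and maintain these cases, which is the manual effort mentioned above. Once written, they can be re-run on every change at almost no extra cost.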

What is manual testing?

Manual testing, as the name suggests, is the technique in which a tester or QA engineer carries out the whole testing process by hand, from writing test cases to executing them.

Every step of the testing process, including test design, test reporting, and even UI testing, is carried out by a team of people, either in-house or outsourced.

In manual testing, QA analysts run tests one by one to find bugs, glitches, and key feature issues before the software application launches. As part of this process, test cases and summary error reports are produced without any automation tools.

A closer look at the differences between Automation Testing and Manual Testing

From their names alone, automation testing and manual testing seem easy to define and tell apart. However, aspects such as test efficiency, test coverage, and the types of testing each one suits call for a more careful and strategic understanding of the two.

The differences between automation testing and manual testing can be classified into the following categories:

- Cost

- Human Intervention

- Types of Testing

- Test execution

- Test efficiency

- Test coverage

1. Testing cost

For every company, choosing a testing technique requires a thorough analysis that weighs the costs against the benefits.

By evaluating the potential costs and the revenue generated by the project itself, this analysis determines whether the project needs automation testing or manual testing. The table below covers the initial investment, the subject of investment, and cost-efficiency.

| Parameters | Automation Testing | Manual Testing |
| --- | --- | --- |
| Initial Investment | Automated testing requires a much larger initial investment to get started. In exchange, it yields a higher ROI in the long run. The cost covers automation testers and automation tools; even open-source tools carry setup and maintenance costs, which can be considerable. | The initial investment in manual testing lies in human resources and team setup. This may seem economical at first, at as little as 1/10 of the cost of automation testing, but in the long term the cost can pile up into huge expenses. |
| Subject of Investment | Investment goes into specialized and customized tools, as well as automation QA engineers, who expect a higher salary range than manual testers. | Investment goes into human resources, either through in-house recruitment or outsourcing, depending on your firm’s needs and strategy. |
| Test volume for cost-efficiency | High-volume regression | Low-volume regression |
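
The trade-off between a large up-front investment and a lower cost per run can be sketched as a simple break-even calculation. All figures below are hypothetical and only illustrate the arithmetic; they are not benchmarks.

```python
import math
from typing import Optional


def manual_cost(runs: int, cost_per_run: float) -> float:
    """Total cost of executing the suite by hand `runs` times."""
    return runs * cost_per_run


def automation_cost(runs: int, setup_cost: float, cost_per_run: float) -> float:
    """One-off setup (tools, scripting) plus a small cost for each run."""
    return setup_cost + runs * cost_per_run


def break_even_runs(
    setup_cost: float, manual_per_run: float, automated_per_run: float
) -> Optional[int]:
    """Smallest number of runs at which automation is no more expensive than
    manual testing, or None if automation never catches up."""
    saving_per_run = manual_per_run - automated_per_run
    if saving_per_run <= 0:
        return None
    return math.ceil(setup_cost / saving_per_run)


# Hypothetical figures: 10,000 to set up automation, 500 per manual run,
# 100 per automated run (maintenance and infrastructure).
runs_needed = break_even_runs(10_000, 500, 100)
```

With these assumed figures, `runs_needed` is 25: below 25 regression runs manual testing is cheaper, and beyond that automation pulls ahead. This matches the high-volume versus low-volume split in the table.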

2. Human Resources Involvement

The comparison between manual and automated testing is not only a question of who executes the tests, a human being or a computer. There are also some general differences in how human resources are involved.

| Parameters | Automation Testing | Manual Testing |
| --- | --- | --- |
| User Interface observation | Automation testing is executed by scripts and code, so it cannot assess how users interact with the software or what they think of it. Matters such as user-friendliness and a positive customer experience are out of reach in this case. | The user interface and user experience are taken into consideration. This process usually involves a whole team. |
| Staff’s programming skill requirement | Automation testing requires proficiency in in-demand programming skills. | Manual testing requires little or no programming skill. |
| Salary range | As estimated by Salary.com, the average Automation Test Engineer salary in the United States is approximately 4% higher than that of a regular Software Tester. | The salary range for manual testing is often lower because it does not require the fluency in programming languages that automated testing demands. |
| Talent availability | Automation testing engineers are relatively hard to recruit. | Talent is easier to acquire because manual testers are easier to train and coach. |

3. Testing types

Software testing breaks down into specific types such as performance testing or system testing, so “automation testing” and “manual testing” are too broad to be judged as a whole. Each type of testing calls for a different approach, either automated or manual. This article covers the following types of testing:

- Performance Testing (Load Test, Stress Test, Spike Test)

- Batch Testing

- Exploratory Testing

- UI Testing

- Adhoc Testing

- Regression Testing

- Build Verification Testing

| Parameters | Automation Testing | Manual Testing |
| --- | --- | --- |
| Performance Testing | Performance testing, including load, stress, and spike tests, should be done with automation testing. | Manual testing is not feasible for performance testing because of limited human resources and a lack of the necessary skills. |
| Batch Testing | Automation allows multiple test scripts to be executed in batches on a nightly basis. | Batch testing is not feasible with manual testing. |
| Exploratory Testing | Exploratory testing depends on a tester’s judgment and improvisation, so it is not suited to automation. | Exploratory testing explores the functionality of the software without requiring prior knowledge of it, so it can be done with manual testing. |
| UI Testing | Automated scripts do not involve human interaction, so they cannot judge how usable or visually appealing an interface is. | Human intervention is part of the manual testing process, so manual testing is well suited to testing the user interface. |
| Adhoc Testing | Adhoc testing is performed randomly, without a plan, so it is not suited to automation testing. | Adhoc testing is by definition executed without guidance from documents or test design techniques, so it is done manually. |
| Regression Testing | Regression testing means re-testing an already tested program. When code changes, automation testing is the practical way to re-run the tests in a short amount of time. | Re-testing changed code or features by hand takes too much effort and time, so manual testing is a poor fit for regression testing. |
| Build Verification Testing | Because it can be automated, build verification testing is feasible. | Build verification testing is difficult and time-consuming to execute manually. |
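
The performance testing row is easier to appreciate with a small example. The sketch below simulates several concurrent "virtual users" with Python's standard `concurrent.futures` module. `handle_request` is a hypothetical stand-in for the system under test; a real load test would call the actual application, usually through a dedicated load-testing tool.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request(payload: int) -> int:
    """Hypothetical stand-in for the system under test."""
    time.sleep(0.01)  # simulate a small amount of processing time
    return payload * 2


def timed_call(payload: int):
    """Call the system once and return (result, elapsed seconds)."""
    start = time.perf_counter()
    result = handle_request(payload)
    return result, time.perf_counter() - start


def run_load_test(virtual_users: int, requests_per_user: int) -> dict:
    """Send requests from `virtual_users` concurrent workers and summarize."""
    payloads = list(range(virtual_users * requests_per_user))
    with ThreadPoolExecutor(max_workers=virtual_users) as pool:
        # pool.map returns results in the same order as the payloads.
        outcomes = list(pool.map(timed_call, payloads))
    latencies = sorted(elapsed for _, elapsed in outcomes)
    return {
        "requests": len(outcomes),
        "all_correct": all(
            result == payload * 2
            for (result, _), payload in zip(outcomes, payloads)
        ),
        "median_latency_s": latencies[len(latencies) // 2],
        "max_latency_s": latencies[-1],
    }
```

Calling `run_load_test(50, 20)` issues 1,000 requests from 50 simultaneous workers and checks every response, which a team of manual testers could not do by hand in any practical way.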

4. Test execution

When it comes to test execution, the expected results are compared with the actual ones. The answer to “How are automated testing and manual testing carried out?” also varies with the engagement scenario, frameworks, approach, and so on.

| Parameters | Automation Testing | Manual Testing |
| --- | --- | --- |
| Training Value | Automation testing results are stored in the form of automated unit test cases. They are easy to access and make it fairly straightforward for a new developer to understand the codebase. | Manual testing offers limited training value, since unit test cases are not actually documented. |
| Engagement | Apart from the initial phase, which involves manual work, automation testing runs mostly on tools, so accuracy is maintained and testers stay engaged. | Manual testing is error-prone, repetitive, and tedious, which may cause testers to lose interest. |
| Approach | Automated testing is more cost-effective for frequent execution of the same set of test cases. | Manual testing is more cost-effective for test cases that are executed only once or twice. |
| Frameworks | Commercial frameworks, paid tools, and open-source tools are often used to get better outcomes from automation testing. | Manual testing uses checklists, stringent processes, or dashboards for drafting test cases. |
| Test Design | Automated unit tests encourage a test-driven development design. | Manual unit tests do not involve coding. |
| UI Change | Even the slightest change in the user interface requires modifying the automated test scripts. | Testers can carry on without interruption when the UI changes. |
| Access to Test Report | Test execution results are visible to anyone who can log into the automation testing system. | Test execution results are stored in Excel or Word files. Access to these files is restricted and not always available. |
| Deadlines | Lower risk of missing a deadline. | Higher risk of missing a deadline. |

5. Test Efficiency

Test efficiency is one of the vital factors for a decision-maker choosing between automated testing and manual testing. The fast-paced development of information technology has increased the demands placed on testing, which in turn has sharply raised the need for automation testing.

In terms of test efficiency, automation testing appears to be the more viable and practical approach, thanks to its fast execution and sustainability.

| Parameters | Automation Testing | Manual Testing |
| --- | --- | --- |
| Time and Speed | Automation testing can execute more test cases in a shorter amount of time. | Manual testing is more time-consuming, and finishing a set of test cases takes considerable effort. |
| Sustainability | Test scripts are usually written in languages such as JavaScript, Python, or C#. The code is reusable and sustainable for later test script development, and changes are easy to make with decent coding skills. | Manual testing does not generate any kind of synchronized documentation for later use. On the other hand, coding skills are not necessary. |
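
One way to see why test scripts count as reusable is a data-driven regression test, sketched below with Python's `unittest` and its `subTest` helper. The function `normalize_username` and the cases listed are hypothetical examples, not taken from any real project.

```python
import unittest


def normalize_username(raw: str) -> str:
    """Hypothetical function under test: trim whitespace and lowercase."""
    return raw.strip().lower()


# Each tuple is one regression case: (input, expected output).
# Covering a newly reported bug means adding one more line here.
CASES = [
    ("Alice", "alice"),
    ("  bob ", "bob"),
    ("CAROL", "carol"),
]


class NormalizeUsernameRegression(unittest.TestCase):
    def test_known_cases(self):
        for raw, expected in CASES:
            # subTest reports each failing case separately instead of
            # stopping at the first failure.
            with self.subTest(raw=raw):
                self.assertEqual(normalize_username(raw), expected)
```

Saved in a test file, this can be run with `python -m unittest`. The same script keeps working release after release, and extending it is a matter of adding rows rather than repeating manual steps.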

6. Test Coverage

Automation testing detects errors more thoroughly. Approaches such as reviews, inspections, and walkthroughs are carried out in full, without leaving anything out. With manual testing, by contrast, the number of device and operating system permutations that can be covered is limited.
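
The permutation problem can be illustrated by generating a test matrix in code. This is a minimal sketch: the device, operating system, and browser lists and the support rule in `is_supported` are assumptions made up for the example.

```python
from itertools import product

# Hypothetical target environments.
DEVICES = ["phone", "tablet", "desktop"]
OPERATING_SYSTEMS = ["Android", "iOS", "Windows"]
BROWSERS = ["Chrome", "Firefox"]


def is_supported(device: str, os_name: str) -> bool:
    """Assumed rule: desktops run Windows, mobile devices run Android or iOS."""
    if device == "desktop":
        return os_name == "Windows"
    return os_name in ("Android", "iOS")


def build_matrix() -> list:
    """Every device/OS/browser combination that the assumed rule allows."""
    return [
        (device, os_name, browser)
        for device, os_name, browser in product(DEVICES, OPERATING_SYSTEMS, BROWSERS)
        if is_supported(device, os_name)
    ]


matrix = build_matrix()
```

Even this small example yields 10 supported combinations out of 18 possible ones. An automated suite can loop over every entry in `matrix`, while a manual team usually has to pick a subset.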

What are the advantages and disadvantages of automation testing and manual testing?

Automation testing and manual testing both offer great opportunities for the testing industry. With either approach, you have to take many aspects into consideration, because each has its own merits and demerits.

Automation Testing pros and cons

Advantages of automation testing

- Fewer repetitive tasks, such as regression tests, test environment setup, and repeated test data input

- Better control and transparency of testing activities. Statistics and graphs about the test process, performance, and error rates are presented explicitly

- Shorter test cycle time, so software can be released more frequently

- Better test coverage

Disadvantages of automation testing

- A long training period for automation testing (tool guidance and process)

- The perspective of a real user is removed from the testing process

- Automation testing tools are required, either purchased from third-party vendors or acquired for free, and each has its own benefits and drawbacks

- Poor coverage of the parts of the test scope that scripts cannot judge, such as user experience

- Costly test maintenance, since the test scripts themselves need debugging

Manual Testing pros and cons

Advantages of manual testing

- Capability to deal with more complex test cases

- Lower initial cost

- Better execution for Ad-hoc testing or exploratory testing

- Coverage of the visual aspects of the software, such as the GUI (Graphical User Interface)

Disadvantages of manual testing

- Prone to mistakes

- Unsustainability

- Longer test execution time when there are many test cases

- Load testing and performance testing are not practical

Should you choose automation testing or manual testing?

Whichever approach you lean toward, the question of what to choose for your firm cannot be answered without considering the parameters above and the pros and cons of the two.

If your company is a multinational corporation with a vision for large-scale digital transformation and has substantial revenue and funds for testing, automation testing is the answer for you.

Automation testing is sustainable in the long run, enabling your corporation to achieve a higher ROI. It also gives your firm better test coverage and test efficiency. Automation testing is the best solution for regression testing and performance testing.

If your company seeks a cheaper solution for test case execution within a smaller scope, you should go with manual testing for its lower testing cost. User interface, user experience, exploratory, and adhoc testing have to be done with manual testing.

All in all, although automation testing benefits many aspects of the quality assurance process, manual testing remains of paramount importance. Please note that when test cases change frequently, manual testing is indispensable and inseparable from automation testing. The combination of the two will produce the most cost-effective approach for your firm.

For the best testing practices, you should see the automation approach as a chance to adopt new ways of working in DevOps, Mobile, and IoT.

Want to dig deeper into automation testing vs. manual testing and decide which one is right for your business? Contact LQA now for a FREE consultation with our specialists and experts.

- Website: https://www.lotus-qa.com/

- Tel: (+84) 24-6660-7474

- Fanpage: https://www.facebook.com/LotusQualityAssurance

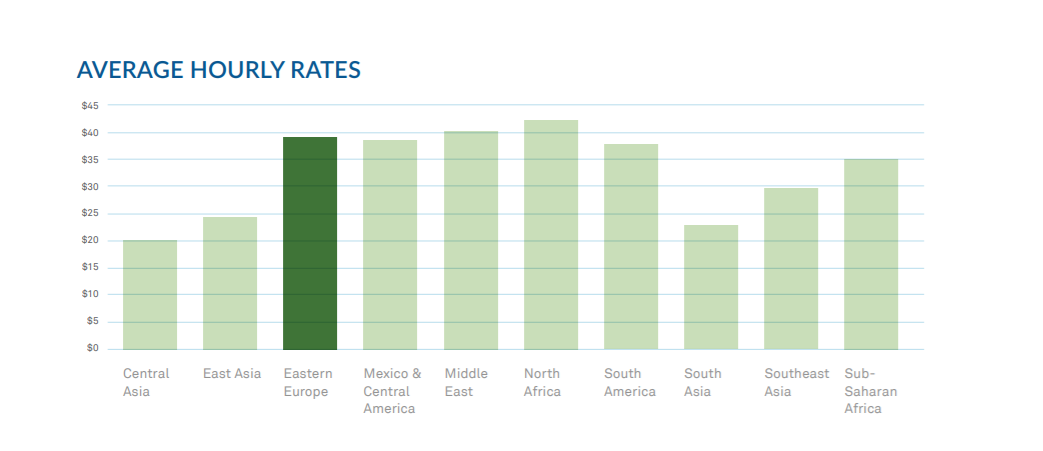

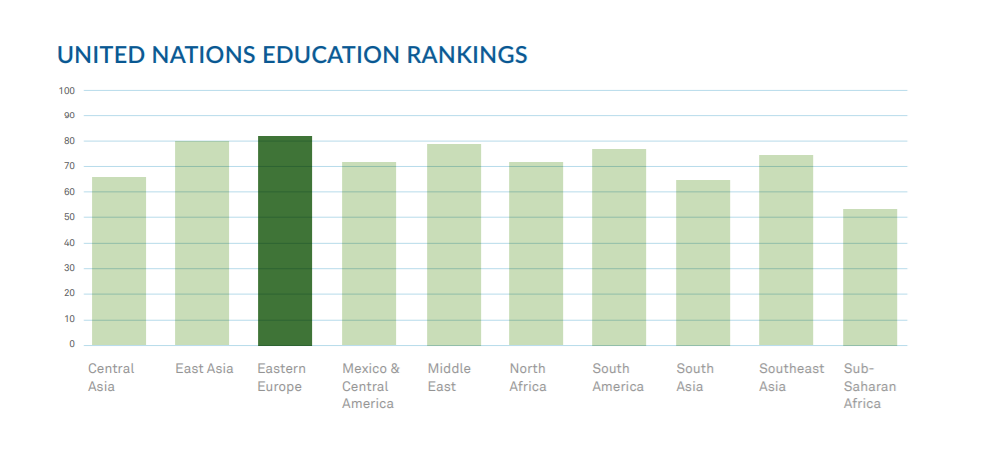
